Copyright

Copyright © 2019 by Janelle Shane

Cover design by Kapo Ng; cover art by Kapo Ng and Janelle Shane

Cover copyright © 2019 by Hachette Book Group, Inc.

Hachette Book Group supports the right to free expression and the value of copyright. The purpose of copyright is to encourage writers and artists to produce the creative works that enrich our culture.

The scanning, uploading, and distribution of this book without permission is a theft of the author’s intellectual property. If you would like permission to use material from the book (other than for review purposes), please contact permissions@hbgusa.com. Thank you for your support of the author’s rights.

Voracious / Little, Brown and Company

Hachette Book Group

1290 Avenue of the Americas, New York, NY 10104

facebook.com/littlebrownandcompany

First ebook edition: November 2019

Voracious is an imprint of Little, Brown and Company, a division of Hachette Book Group, Inc. The Voracious name and logo are trademarks of Hachette Book Group, Inc.

The publisher is not responsible for websites (or their content) that are not owned by the publisher.

The Hachette Speakers Bureau provides a wide range of authors for speaking events. To find out more, go to hachettespeakersbureau.com or call (866) 376-6591.

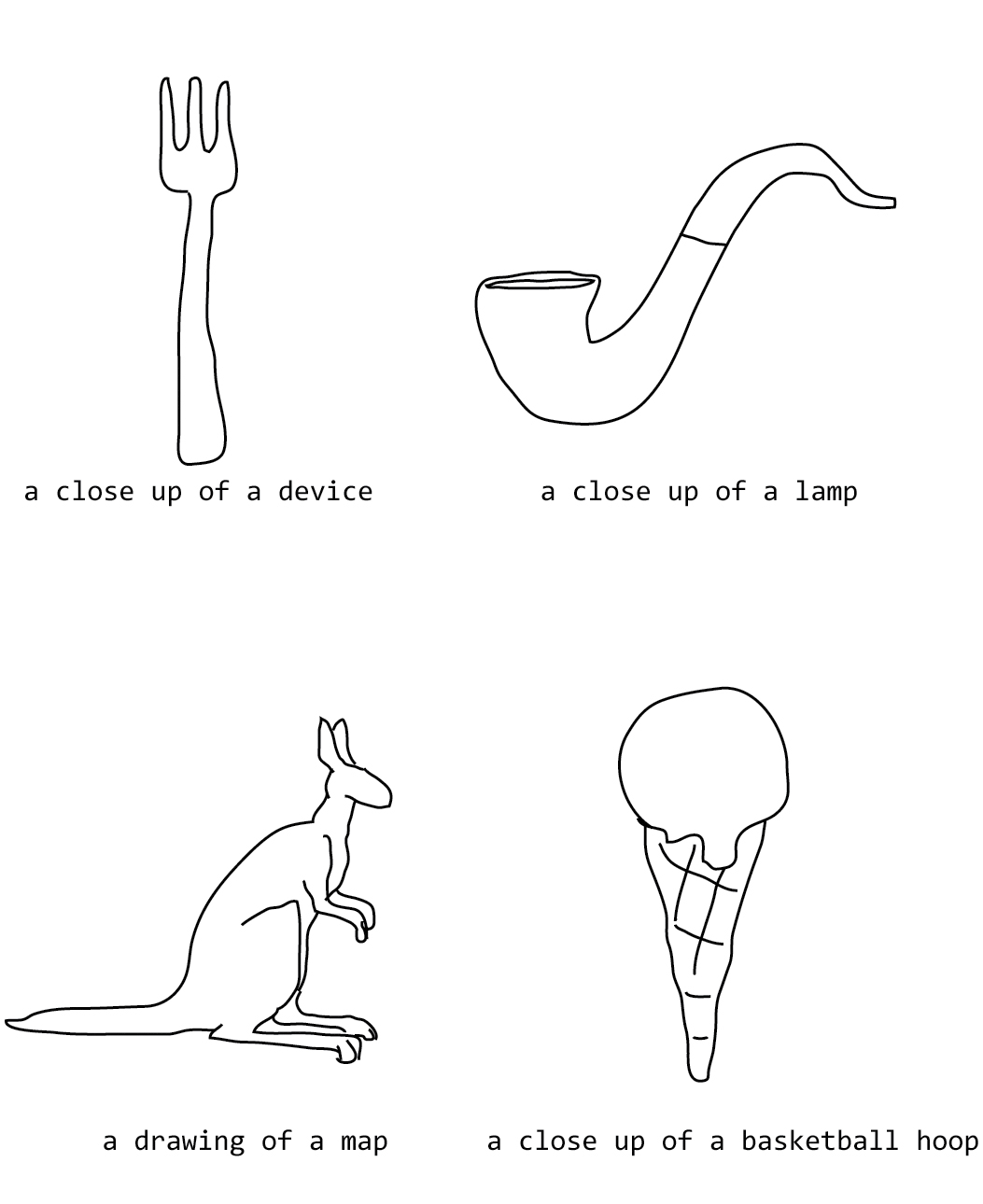

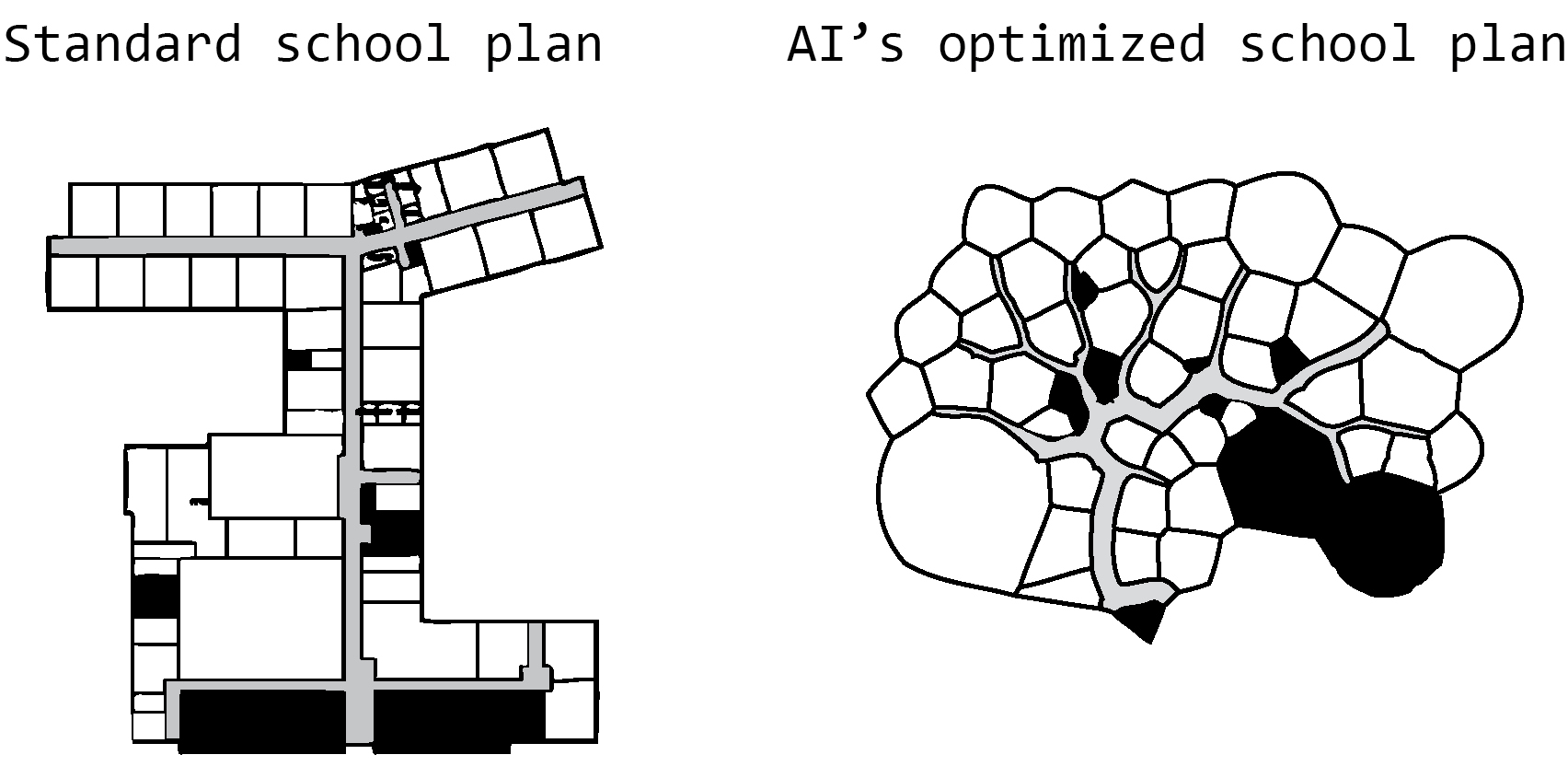

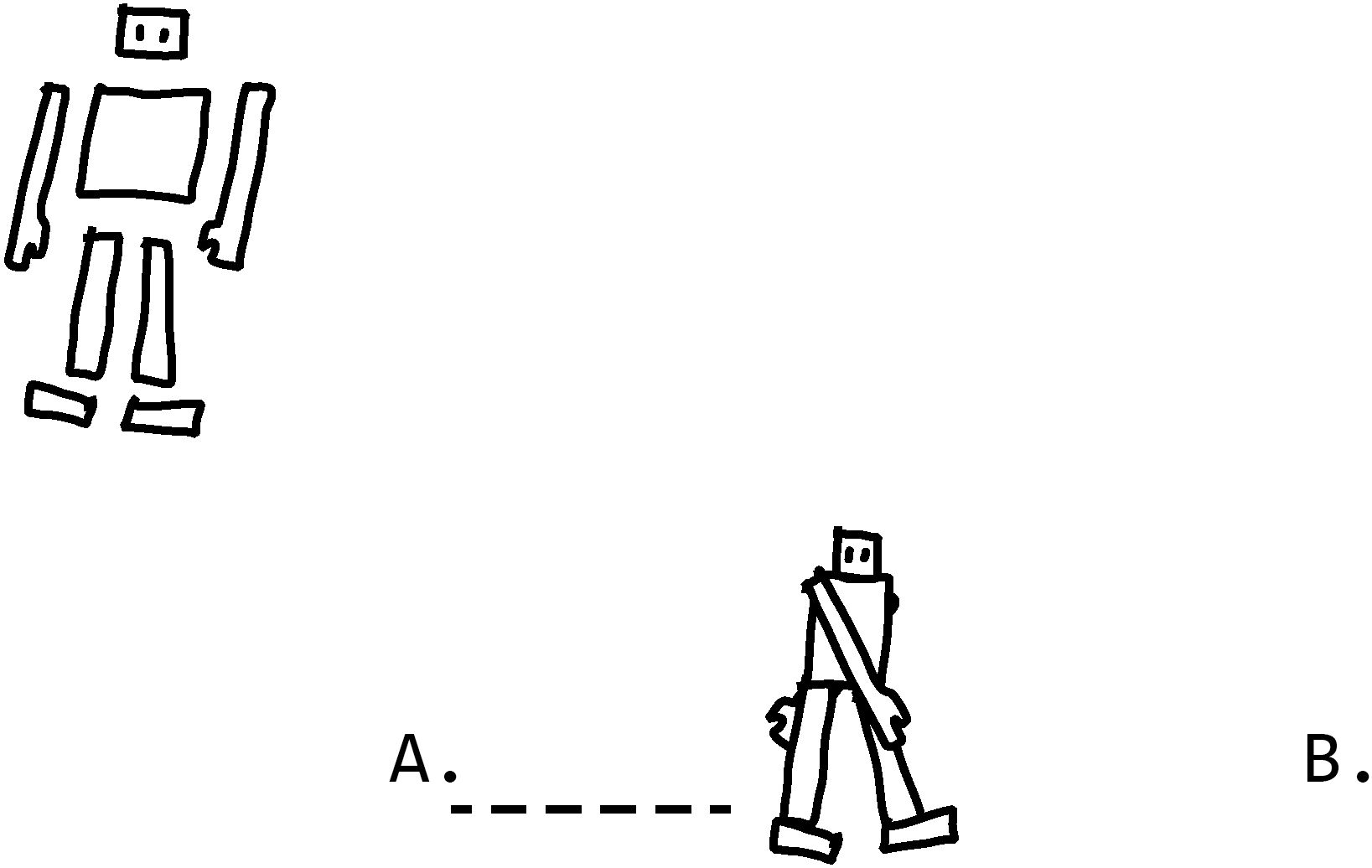

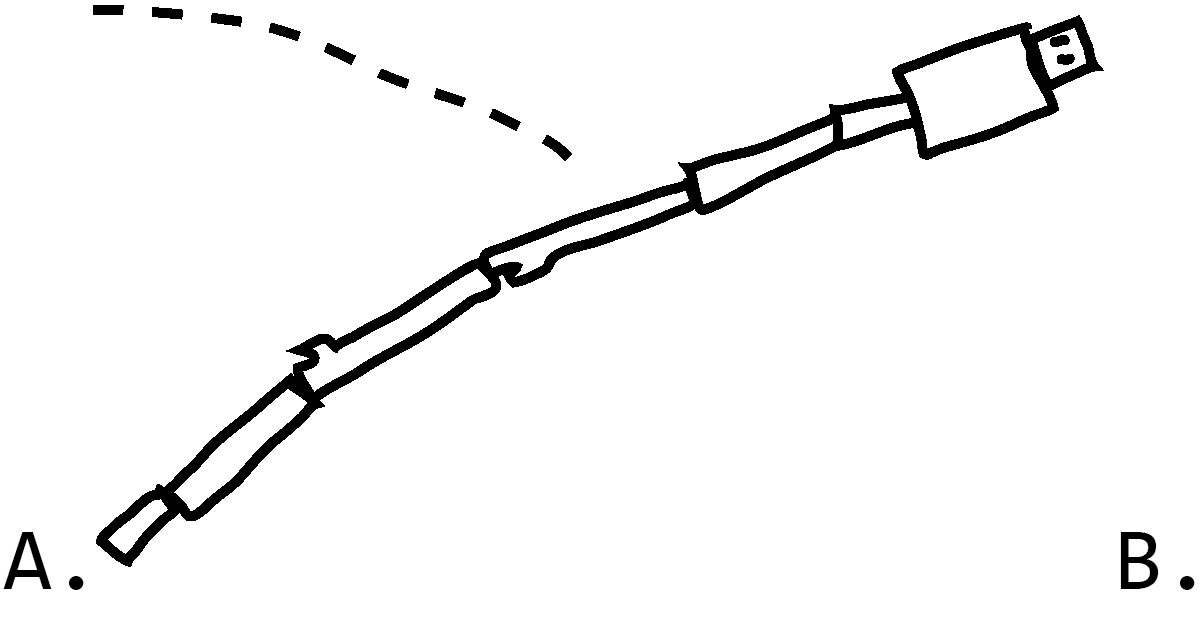

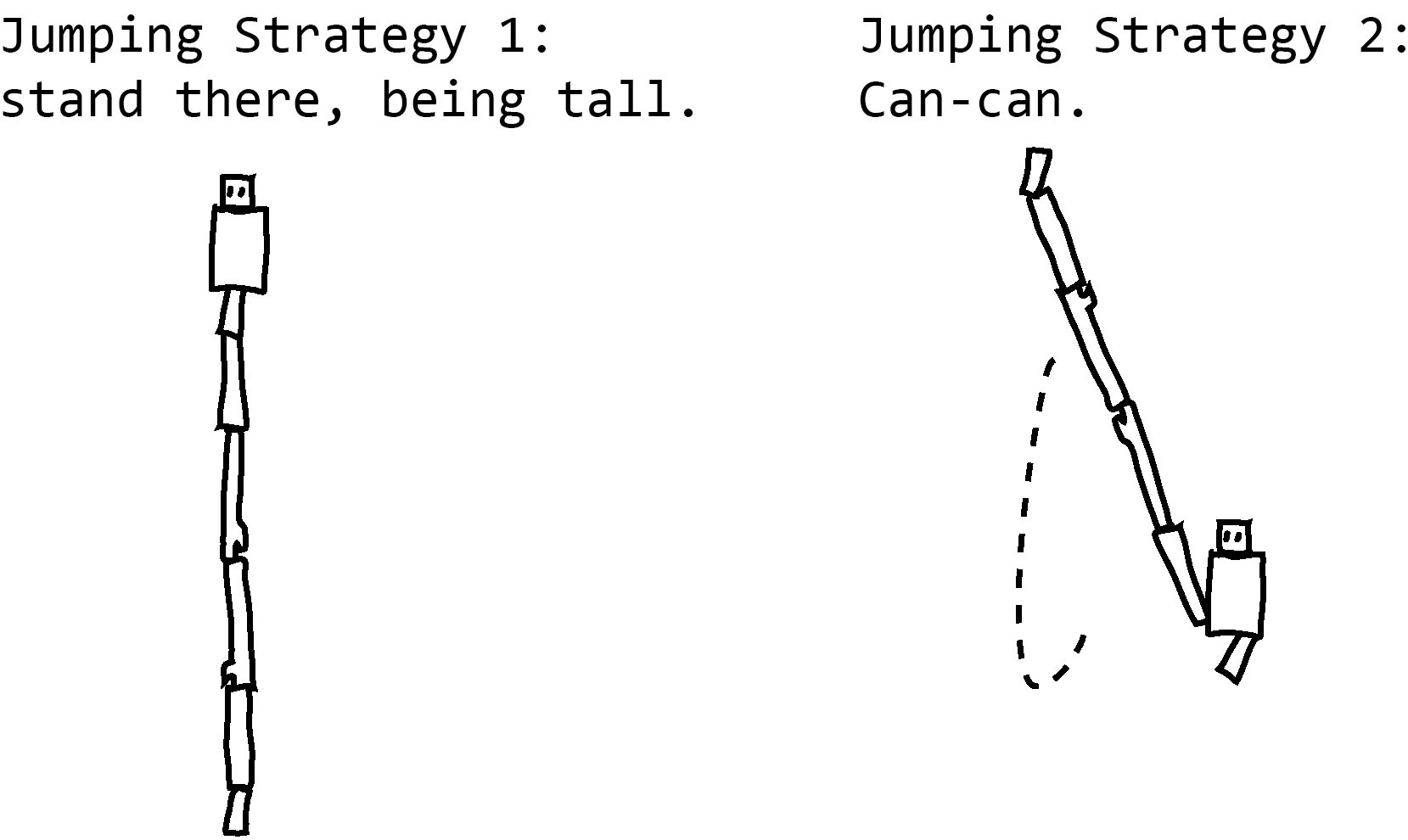

All images created by the author, except the following: GANcats image here made available under Creative Commons BY-NC 4.0 license by NVIDIA Corporation; used here with permission. Quickdraw kangaroos images here made available under Creative Commons BY-4.0 license by Google. School plan images here © by Joel Simon; used with permission. Submarine images here © by Danny Karmon, Yoav Goldberg, and Daniel Zoran; used with permission. Skiers image here © by Andrew Ilyas, Logan Engstrom, Anish Athalye, and Jessy Lin; used with permission.

ISBN 978-0-316-52523-7

E3-20190927-JV-NF-ORI


INTRODUCTION

AI is everywhere

Teaching an AI to flirt wasn’t really my kind of project.

To be sure, I’d done a lot of weird AI projects already. On my blog, AI Weirdness, I’d trained an AI to come up with new names for cats—Mr. Tinkles and Retchion were some of its less successful ones—and asked an AI to generate new recipes, some of which would sometimes call for “peeled rosemary” or handfuls of broken glass. But teaching an AI to sweet-talk humans was something else entirely.

AI learns by example—in this case studying a list of existing pickup lines and using them to generate new ones. The problem: the training dataset on my computer screen was a list of pickup lines that I had collected from various internet sources, all of which were awful. They ranged from cheesy bad puns all the way to rude innuendo. Once I’d trained an AI to imitate them, it would be able to produce thousands more at the push of a button. And, like an impressionable child, it wouldn’t know what it should and shouldn’t imitate. The AI would start with a blank slate, knowing nothing about what pickup lines are (or even what English is), and would learn from the examples, doing its best to imitate all the patterns it found. Including the grossness. It wouldn’t know any better.

I considered giving up on the project, but I had a blog post to write, and I had just spent an inconvenient amount of time collecting the example pickup lines. So I started the training. The AI began to look for patterns in the examples, inventing and testing rules that would help it predict which letters should appear in which order in a pickup line. Finally, training was over. With some trepidation, I asked the AI for some lines:

You must be a tringle? Cause you’re the only thing here.

Hey baby, you’re to be a key? Because I can bear your toot?

Are you a candle? Because you’re so hot of the looks with you.

You’re so beautiful that you say a bat on me and baby.

You look like a thing and I love you.

I was surprised and delighted. The AI’s virtual brain (about the same complexity as a worm’s) wasn’t capable of picking up the subtleties of the dataset, including misogyny or cheesiness. It did its best with the patterns it had managed to glean… and arrived at a different, arguably better, solution to the problem of making a stranger smile.

Though to me its lines were a resounding success, the cluelessness of my AI partner may come as a surprise if your knowledge of AI comes from reading news headlines or science fiction. It’s common to see companies claim that AIs are capable of judging the nuances of human language as well as or better than humans can, or that AIs will soon be able to replace humans in most jobs. AI will soon be everywhere, the press releases claim. And they’re both right—and very wrong.

In fact, AI is already everywhere. It shapes your online experience, determining the ads you see and suggesting videos while detecting social media bots and malicious websites. Companies use AI-powered resume scanners to decide which candidates to interview, and they use AI to decide who should be approved for a loan. The AIs in self-driving cars have already driven millions of miles (with the occasional human rescue during moments of confusion). We’ve also put AI to work in our smartphones, recognizing our voice commands, autotagging faces in our photos, and even applying a video filter that makes it look like we have awesome bunny ears.

But we also know from experience that everyday AI is not flawless, not by a long shot. Ad delivery haunts our browsers with endless ads for boots we already bought. Spam filters let the occasional obvious scam through or filter out a crucial email at the most inopportune time.

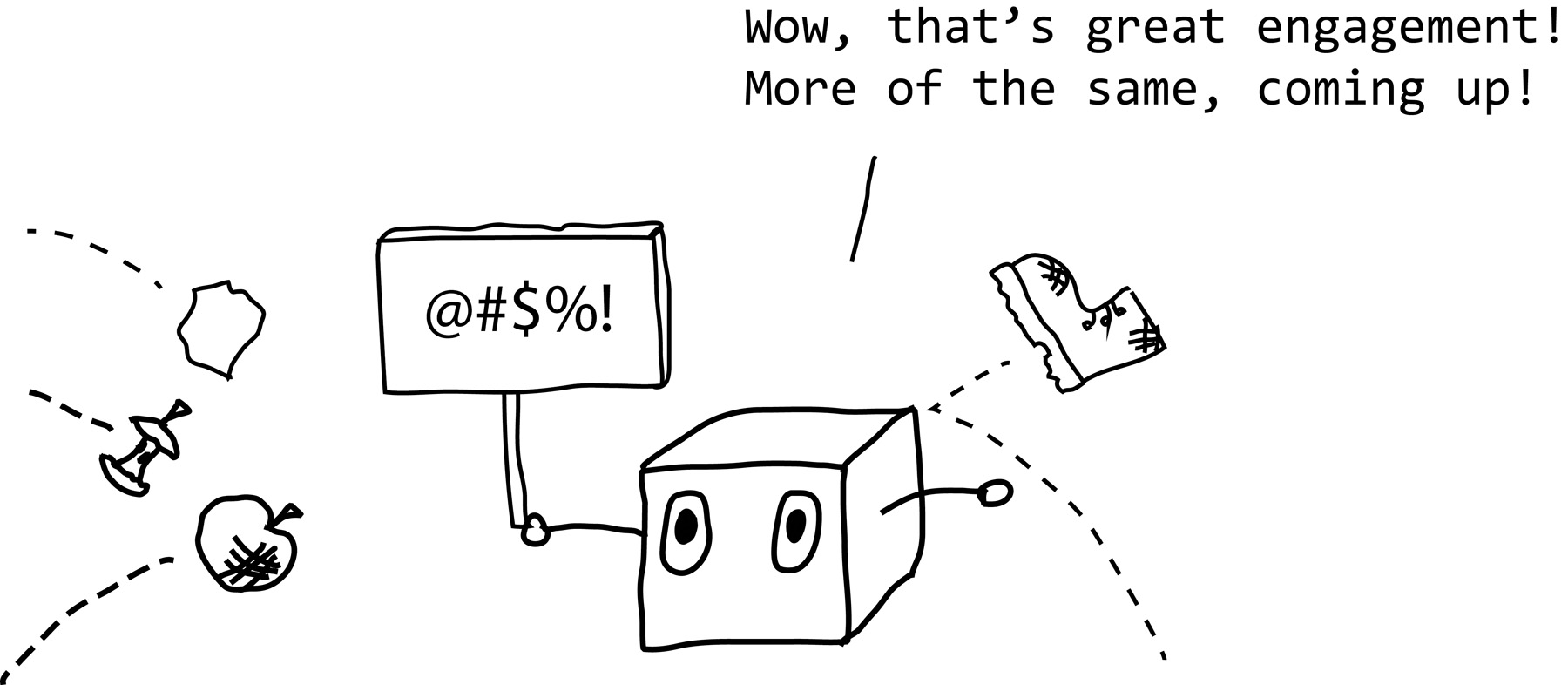

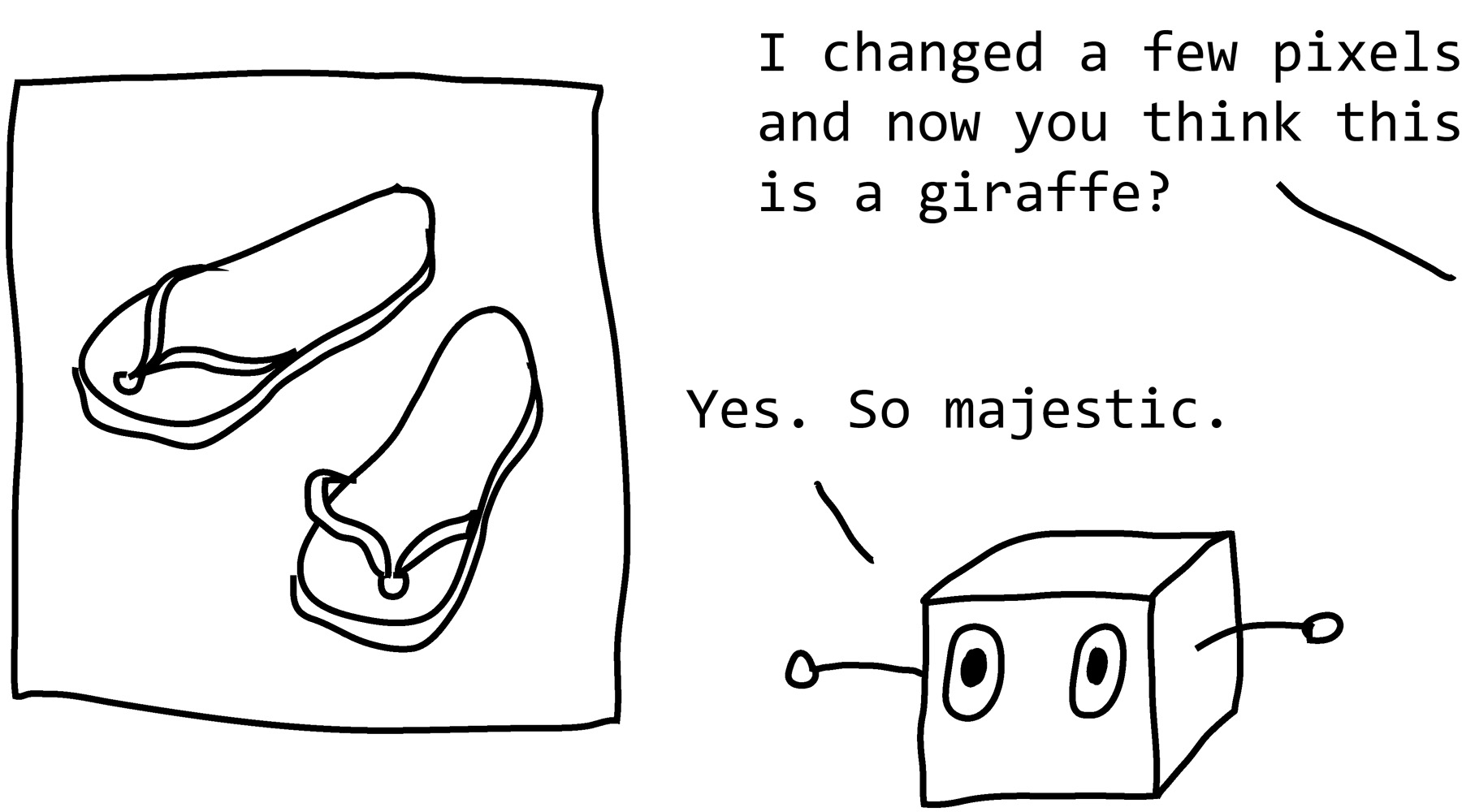

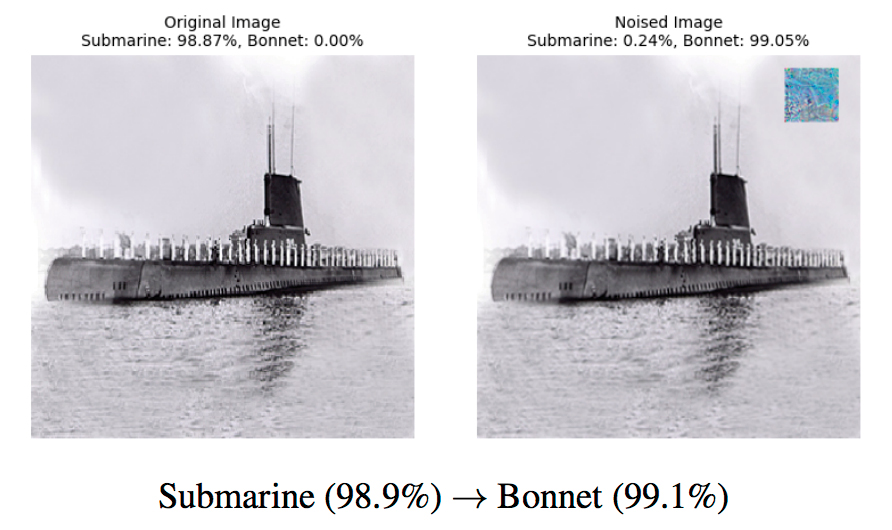

As more of our daily lives are governed by algorithms, the quirks of AI are beginning to have consequences far beyond the merely inconvenient. Recommendation algorithms embedded in YouTube point people toward ever more polarizing content, traveling in a few short clicks from mainstream news to videos by hate groups and conspiracy theorists.1 The algorithms that make decisions about parole, loans, and resume screening are not impartial but can be just as prejudiced as the humans they’re supposed to replace—sometimes even more so. AI-powered surveillance can’t be bribed, but it also can’t raise moral objections to anything it’s asked to do. It can also make mistakes when it’s misused—or even when it’s hacked. Researchers have discovered that something as seemingly insignificant as a small sticker can make an image recognition AI think a gun is a toaster, and a low-security fingerprint reader can be fooled more than 77 percent of the time with a single master fingerprint.

People often sell AI as more capable than it actually is, claiming that their AI can do things that are solidly in the realm of science fiction. Others advertise their AI as impartial even while its behavior is measurably biased. And often what people claim as AI performance is actually the work of humans behind the curtain. As consumers and citizens of this planet, we need to avoid being duped. We need to understand how our data is being used and understand what the AI we’re using really is—and isn’t.

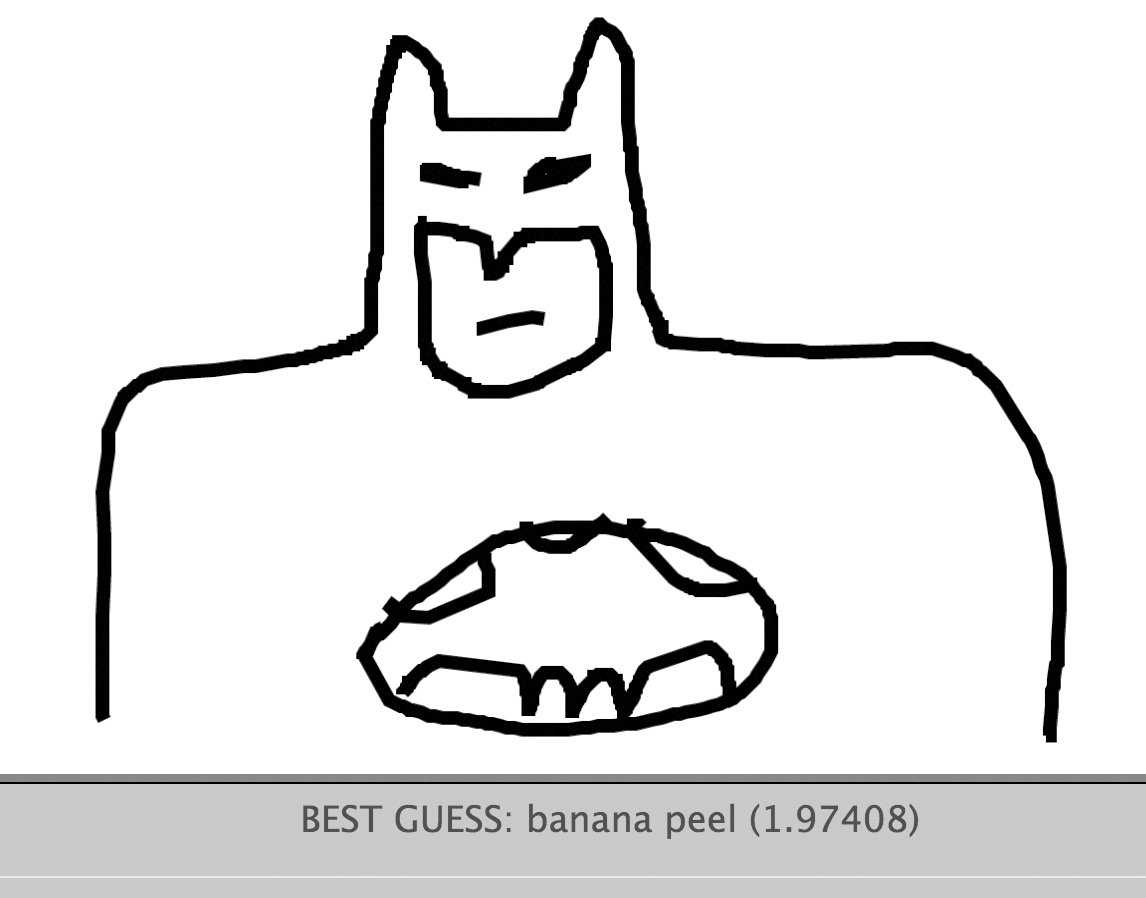

On AI Weirdness, I spend my time doing fun experiments with AI. Sometimes this means giving AIs unusual things to imitate—like those pickup lines. Other times, I see if I can take them out of their comfort zones—like the time I showed an image recognition algorithm a picture of Darth Vader and simply asked it what it saw: it declared that Darth Vader was a tree and then proceeded to argue with me about it. From my experiments, I’ve found that even the most straightforward task can cause an AI to fail, as if you’d played a practical joke on it. But it turns out that pranking an AI—giving it a task and watching it flail—is a great way to learn about it.

In fact, as we’ll see in this book, the inner workings of AI algorithms are often so strange and tangled that looking at an AI’s output can be one of the only tools we have for discovering what it understood and what it got terribly wrong. When you ask an AI to draw a cat or write a joke, its mistakes are the same sorts of mistakes it makes when processing fingerprints or sorting medical images, except it’s glaringly obvious that something’s gone wrong when the cat has six legs and the joke has no punchline. Plus, it’s really hilarious.

In the course of my attempts to take AIs out of their comfort zone and into ours, I’ve asked AIs to write the first line of a novel, recognize sheep in unusual places, write recipes, name guinea pigs, and generally be very weird. But from these experiments, you can learn a lot about what AI’s good at and what it struggles to do—and what it likely won’t be capable of doing in my lifetime or yours.

Here’s what I’ve learned:

The Five Principles of AI Weirdness:

• The danger of AI is not that it’s too smart but that it’s not smart enough.

• AI has the approximate brainpower of a worm.

• AI does not really understand the problem you want it to solve.

• But: AI will do exactly what you tell it to. Or at least it will try its best.

• And AI will take the path of least resistance.

So let’s enter the strange world of AI. We’ll learn what AI is—and what it isn’t. We’ll learn what it’s good at and where it’s doomed to fail. We’ll learn why the AIs of the future might look less like C-3PO than like a swarm of insects. We’ll learn why a self-driving car would be a terrible getaway vehicle during a zombie apocalypse. We’ll learn why you should never volunteer to test a sandwich-sorting AI, and we’ll encounter walking AIs that would rather do anything but walk. And through it all we’ll learn how AI works, how it thinks, and why it’s making the world a weirder place.

CHAPTER 1

What is AI?

If it seems like AI is everywhere, it’s partly because “artificial intelligence” means lots of things, depending on whether you’re reading science fiction or selling a new app or doing academic research. When someone says they have an AI-powered chatbot, should we expect it to have opinions and feelings like the fictional C-3PO? Or is it just an algorithm that learned to guess how humans are likely to respond to a given phrase? Or a spreadsheet that matches words in your question with a library of preformulated answers? Or an underpaid human who types all the answers from some remote location? Or—even—a completely scripted conversation where human and AI are reading human-written lines like characters in a play? Confusingly, at various times, all these have been referred to as AI.

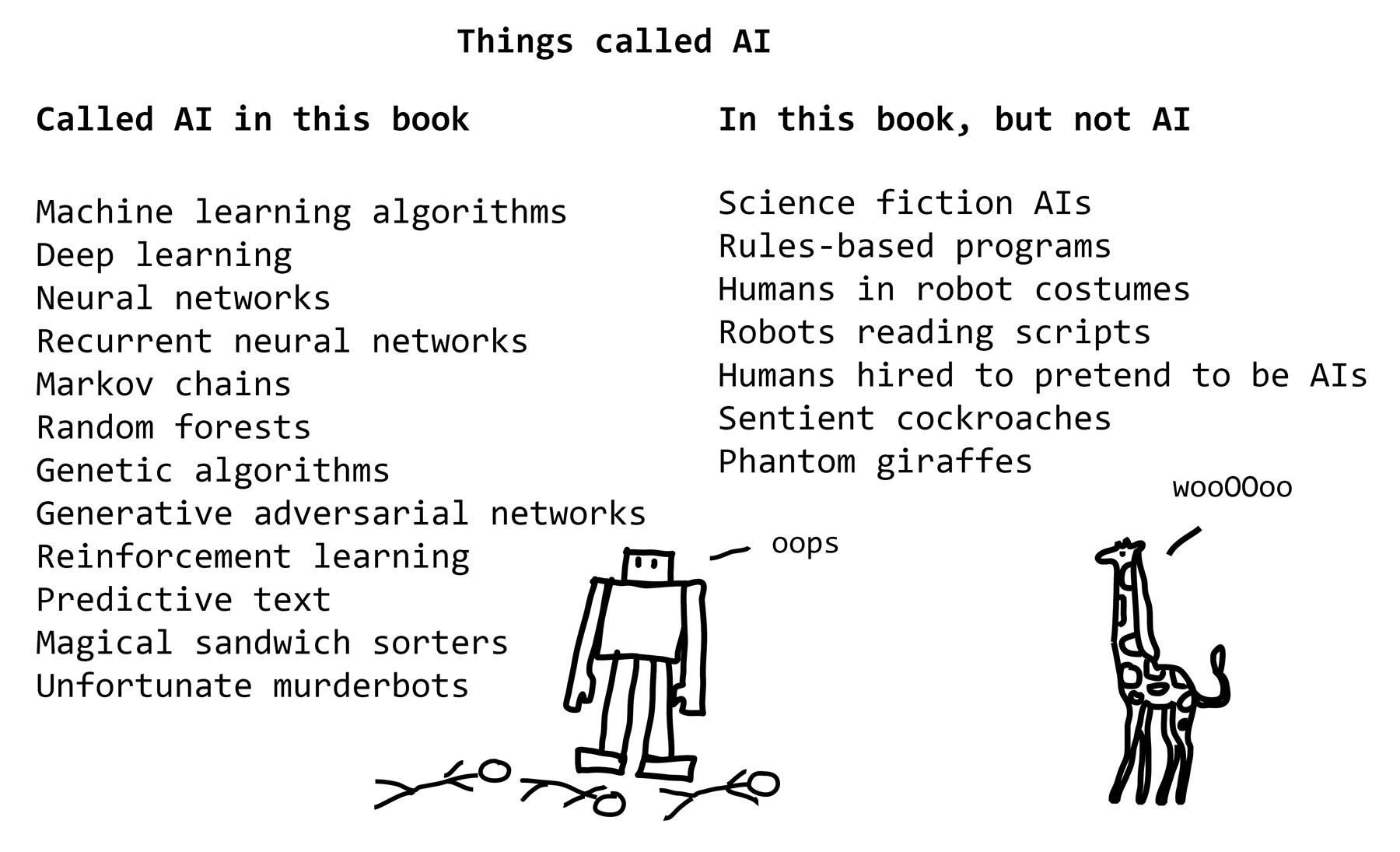

For the purposes of this book, I’ll use the term AI the way it’s mostly used by programmers today: to refer to a particular style of computer program called a machine learning algorithm. This chart shows a bunch of the terms I’ll be covering in this book and where they fall according to this definition.

Everything that I’m calling “AI” in this book is also a machine learning algorithm—let’s talk about what that is.

KNOCK, KNOCK, WHO’S THERE?

To spot an AI in the wild, it’s important to know the difference between machine learning algorithms (what we’re calling AI in this book) and traditional (what programmers call rules-based) programs. If you’ve ever done basic programming, or even used HTML to design a website, you’ve used a rules-based program. You create a list of commands, or rules, in a language the computer can understand, and the computer does exactly what you say. To solve a problem with a rules-based program, you have to know every step required to complete the program’s task and how to describe each one of those steps.

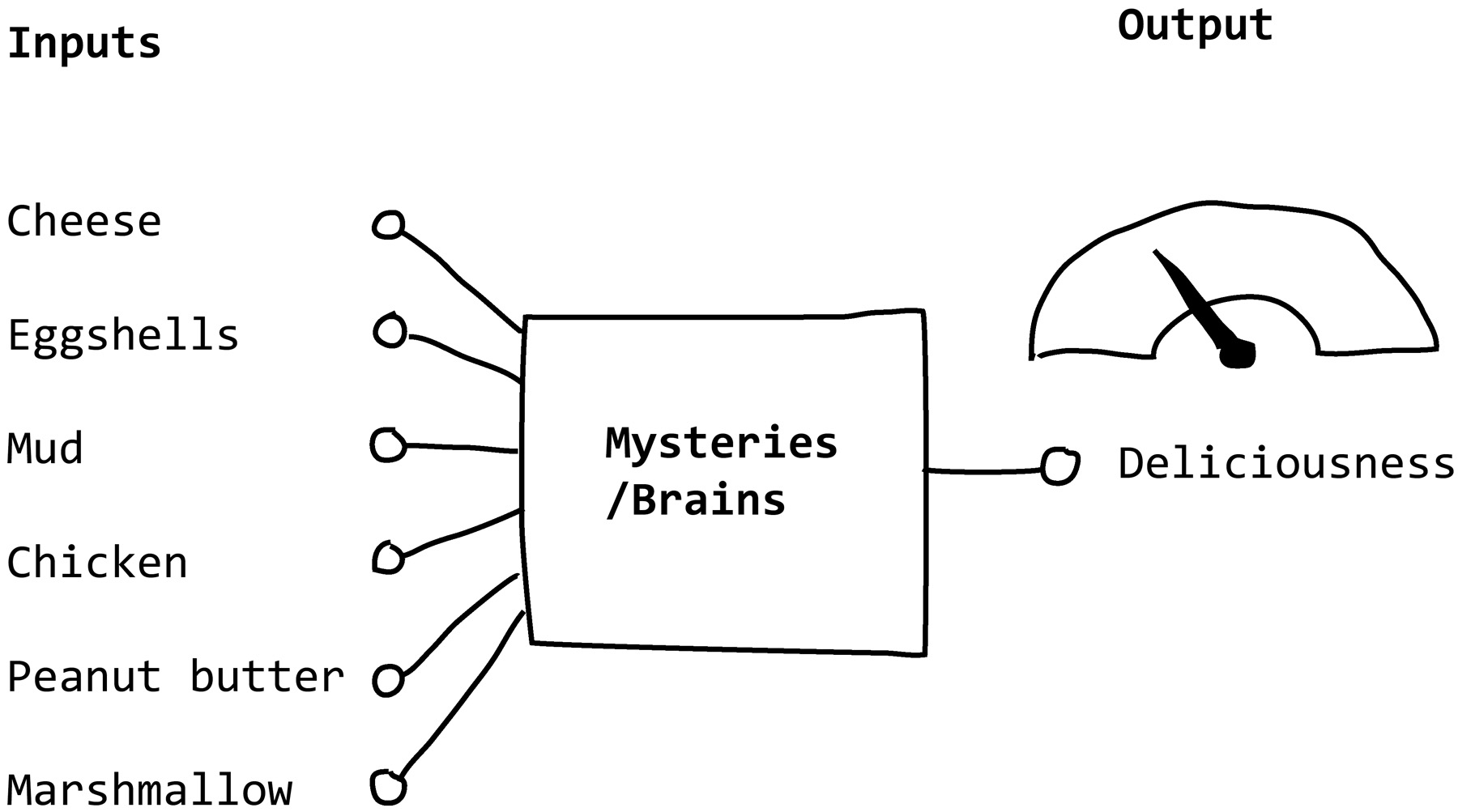

But a machine learning algorithm figures out the rules for itself via trial and error, gauging its success on goals the programmer has specified. The goal could be a list of examples to imitate, a game score to increase, or anything else. As the AI tries to reach this goal, it can discover rules and correlations that the programmer didn’t even know existed. Programming an AI is almost more like teaching a child than programming a computer.

Rules-based programming

Let’s say I wanted to use the more familiar rules-based programming to teach a computer to tell knock-knock jokes. The first thing I’d do is figure out all the rules. I’d analyze the structure of knock-knock jokes and discover that there’s an underlying formula, as follows:

Knock, knock.

Who’s there?

[Name]

[Name] who?

[Name] [Punchline]

Once I set this formula in stone, there are only two slots free that the program can control: [Name] and [Punchline]. Now the problem is reduced to just generating these two items. But I still need rules for generating them.

I could set up a list of valid names and a list of valid punchlines, as follows:

| Names | Punchlines |
| --- | --- |
| Lettuce | in, it’s cold out here! |
| Harry | up, it’s cold out here! |
| Dozen | anybody want to let me in? |
| Orange | you going to let me in? |

Now the computer can produce knock-knock jokes by choosing a name–punchline pair from the list and slotting it into the template. This doesn’t create new knock-knock jokes but only gives me jokes I already know. I might try making things interesting by allowing [it’s cold out here!] to be replaced with a few different phrases: [I’m being attacked by eels!] and [lest I transform into an unspeakable eldritch horror]. Then the program can generate a new joke:

Knock, knock.

Who’s there?

Harry.

Harry who?

Harry up, I’m being attacked by eels!

I could replace [eels] with [an angry bee] or [a manta ray] or any number of things. Then I can get the computer to generate even more new jokes. With enough rules, I could potentially generate hundreds of jokes.
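Here is a minimal sketch of that rules-based approach in Python. It is only one possible way to write it: the names `TEMPLATE`, `PAIRS`, `PLIGHTS`, `THREATS`, `make_joke`, and `all_jokes` are made up for this illustration, and the lists hold only the names, punchlines, and phrases mentioned above.

```python
import random

# The formula set in stone: only the name and the punchline are free slots.
TEMPLATE = (
    "Knock, knock.\n"
    "Who's there?\n"
    "{name}.\n"
    "{name} who?\n"
    "{name} {punchline}"
)

# Name-punchline pairs. "{plight}" marks where "it's cold out here!"
# may be swapped for a different phrase.
PAIRS = [
    ("Lettuce", "in, {plight}"),
    ("Harry", "up, {plight}"),
    ("Dozen", "anybody want to let me in?"),
    ("Orange", "you going to let me in?"),
]

# "{threat}" is a second slot, so the eels can become a bee or a manta ray.
PLIGHTS = [
    "it's cold out here!",
    "I'm being attacked by {threat}!",
    "lest I transform into an unspeakable eldritch horror",
]
THREATS = ["eels", "an angry bee", "a manta ray"]


def make_joke(rng):
    """Pick one option for every slot and fill in the template."""
    name, punchline = rng.choice(PAIRS)
    plight = rng.choice(PLIGHTS).format(threat=rng.choice(THREATS))
    return TEMPLATE.format(name=name, punchline=punchline.format(plight=plight))


def all_jokes():
    """List every distinct joke these rules can ever produce."""
    jokes = set()
    for name, punchline in PAIRS:
        for plight in PLIGHTS:
            for threat in THREATS:
                filled = plight.format(threat=threat)
                jokes.add(
                    TEMPLATE.format(name=name, punchline=punchline.format(plight=filled))
                )
    return sorted(jokes)


print(make_joke(random.Random()))
print(len(all_jokes()))  # 12
```

With these short lists the program can only ever produce twelve distinct jokes. Every one of them follows from a rule that a person typed in, which is the defining trait of a rules-based program.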

Depending on the level of sophistication I’m going for, I could spend a lot of time coming up with more advanced rules. I could find a list of existing puns and figure out a way to transform them into punchline format. I could even try programming in pronunciation rules, rhymes, semihomophones, cultural references, and so forth in an attempt to get the computer to recombine them into interesting puns. If I’m clever about it, I can get the program to generate new puns that nobody’s ever thought of. (Although one person who tried this discovered that the algorithm’s list of sayings contained words and phrases that were so old or obscure that almost nobody could understand its jokes.) No matter how sophisticated my joke-making rules get, though, I’m still telling the computer exactly how to solve the problem.

Training AI

But when I train AI to tell knock-knock jokes, I don’t make the rules. The AI has to figure out those rules on its own.

The only thing I give it is a set of existing knock-knock jokes and instructions that are essentially, “Here are some jokes; try to make more of these.” And the materials I give it to work with? A bucket of random letters and punctuation.

Then I leave to get coffee.

The AI gets to work.

The first thing it does is try to guess a few letters of a few knock-knock jokes. It’s guessing 100 percent randomly at this point, so this first guess could be anything. Let’s say it guesses something like “qasdnw,m sne?mso d.” As far as it knows, this is how you tell a knock-knock joke.

Then the AI looks at what those knock-knock jokes are actually supposed to be. Chances are it’s very wrong. “All right,” says the AI, and it subtly adjusts its own structure so that it will guess a little more accurately next time. There’s a limit to how drastically it can change itself, because we don’t want it to try to memorize every new chunk of text it sees. But with a minimum of tweaking, the AI can discover that if it guesses nothing but k’s and spaces, it will at least be right some of the time. After looking at one batch of knock-knock jokes and making one round of corrections, its idea of a knock-knock joke looks something like this:

kk

kk k kk

keokk k

k

k

Now, it’s not the world’s greatest knock-knock joke. But with this as a starting point, the AI can move on to a second batch of knock-knock jokes, then another. Each time, it adjusts its joke formula to improve its guesses.

After a few more rounds of guessing and self-adjusting, it has learned more rules. It has learned to employ the occasional question mark at the end of a line. It is beginning to learn to use vowels (o in particular). It’s even making an attempt at using apostrophes.

noo,

Lnoc noo

Kor?

hnos h nc

pt’b oa to’

How well did its rules about generating knock-knock jokes match reality? It still seems to be missing something.

If it wants to get closer to generating a passable knock-knock joke, it’s going to have to figure out some rules about which order the letters come in. Again, it starts by guessing. The guess that o is always followed by q? Not so great, it turns out. But then it guesses that o is often followed by ck. Gold. It has made some progress. Behold its idea of the perfect joke:

Whock

Whock

Whock

Whock

Whock Whock Whock

Whock Whock

Whock

Whock

It’s not quite a knock-knock joke—it sounds more like some kind of chicken. The AI’s going to need to figure out some more rules.
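To make the idea of a letter-order rule concrete, here is a rough Python sketch. It is not the AI described in this chapter: it swaps in a much simpler technique that just counts which character follows which, with no guessing and self-adjusting at all. The two training jokes are borrowed from earlier in the chapter, and the names `JOKES`, `learn_letter_rules`, and `generate` are invented for the example.

```python
import random
from collections import Counter, defaultdict

# A tiny stand-in dataset (an assumption; a real dataset would be far larger).
JOKES = [
    "Knock, knock.\nWho's there?\nHarry.\nHarry who?\nHarry up, it's cold out here!\n",
    "Knock, knock.\nWho's there?\nLettuce.\nLettuce who?\nLettuce in, it's cold out here!\n",
]


def learn_letter_rules(texts):
    """Count how often each character is followed by each other character."""
    follows = defaultdict(Counter)
    for text in texts:
        for current, nxt in zip(text, text[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows, start, length, rng):
    """Write text one character at a time, using only the learned counts."""
    out = start
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:  # no rule for this character, so stop early
            break
        chars, weights = zip(*options.items())
        out += rng.choices(chars, weights=weights)[0]
    return out


rules = learn_letter_rules(JOKES)
print(rules["o"].most_common(1))  # [('c', 4)]
print(rules["c"].most_common(1))  # [('k', 4)]
print(generate(rules, "K", 60, random.Random()))
```

Even this crude counter discovers that o tends to be followed by c, and c by k. Because it only ever looks one character back, its output tends to stay at the same word-shaped-gibberish level as the examples here.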

It looks at the dataset again. It tries new ways to use “ock,” looking for new combinations that match its example knock-knock jokes better.

nooc hock hork

aKoo kWhoc

Khock

hors Whnes Whee

noo oooc

Koo?

noc no’c Wno h orea

ao no oo k KeockWnork Koos

Thoe

nock K ock

Koc

hoo

KKock Knock Thock Whonk

All this progress happens in just a few minutes. By the time I return with my coffee, the AI has already discovered that starting with “Knock Knock / Who’s There?” fits the existing knock-knock jokes really, really well. It decides to apply this rule always.

But the rest of the formula takes quite a while to figure out, and the AI frequently devolves into playing a game of telephone with itself—or acting out a robot version of the “Who’s on First?” comedy routine:

Knock Knock

Who’s There?

Iane

Aatar who?

Aaane who?

Aan who?

Anac who?

Iobe who?

Irata who?

Soon it figures out the rest of the formula, but no actual, you know, words:

It also sometimes gets a bit carried away with the length of the punchline.

Knock Knock

Who’s There?

Cheed

I?len who?

Ita gee the lasd emotory oud ane weonges oak anet ane tee soore theis onithit the where your thasg tate tine if thele ter the theung!

At some point in its training, it learns the joke about the Cow with No Lips, which becomes its Favorite Thing Ever. If you’re not familiar, the joke about the Cow with No Lips goes something like:

Knock, knock.

Who’s there?

A cow with no lips.

A cow with no lips who?

A cow with no lips says ooo ooo ooooooo.

According to the AI, this is comedy gold. So the AI spends a while thinking that the punchline to Every Single Joke is “ooo ooo ooooooo”…

Knock Knock

Who’s There?

Alec

Anic who?

Wow co poo the tho tho door to the soout oo ooo oooo ooo oo oo oo oo ooo oo oo oo ooo ooo oooo ooo ooo oo oo ooo ooo ooo ooo ooo oo oo ooo ooo ooo ooo oo oo oo ooo ooo ooo ooo…

It has discovered a rule, all right. Eventually it (reluctantly, I assume) tones down how often it uses the Cow with No Lips rule to make punchlines. But it still has problems that are remarkably similar to two people telling knock-knock jokes over a very bad phone connection, maybe forgetting who was actually telling the joke:

Knock Knock

Who’s There?

Alther

Water who?

Water who who?

Who who?

Arton and out on the moor?

At last it figures out the structure of a knock-knock joke and proceeds to make jokes that, while technically correct, don’t make all that much sense. Many of them are partially plagiarized from jokes in the training dataset.

Knock Knock

Who’s There?

Robin

Robin who?

Robin you! Git me and I’m leaving

Ireland

Ireland who?

Ireland you money, butt.

And then. It produced. An actual joke. That it had composed entirely on its own, without plagiarizing from the dataset, and that was not only intelligible but also actually… funny?

Knock Knock

Who’s There?

Alec

Alec who?

Alec-Knock Knock jokes.

Did the AI suddenly begin to understand knock-knock jokes and English-language puns? Almost definitely not, given the very small size of the dataset. But the freedom that the AI had—free rein over the entire set of possible characters—allowed it to try new combinations of sounds, one of which ended up actually working. So more of a victory for the infinite monkey theory* than a proof of concept for the next AI-only comedy club.

JUST LET THE AI FIGURE IT OUT

Given a set of knock-knock jokes and no further instruction, the AI was able to discover a lot of the rules that I would have otherwise had to manually program into it. Some of its rules I would never have thought to program in or wouldn’t even have known existed—such as The Cow with No Lips Is the Best Joke.

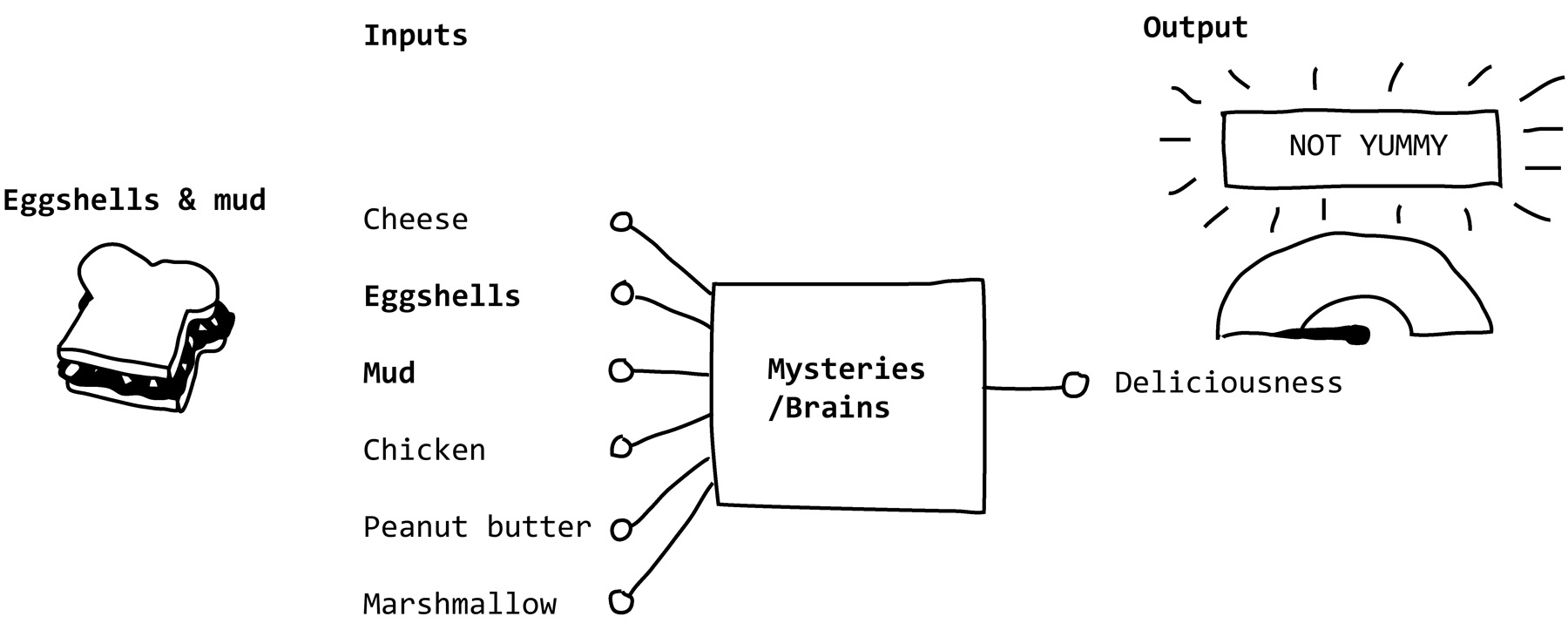

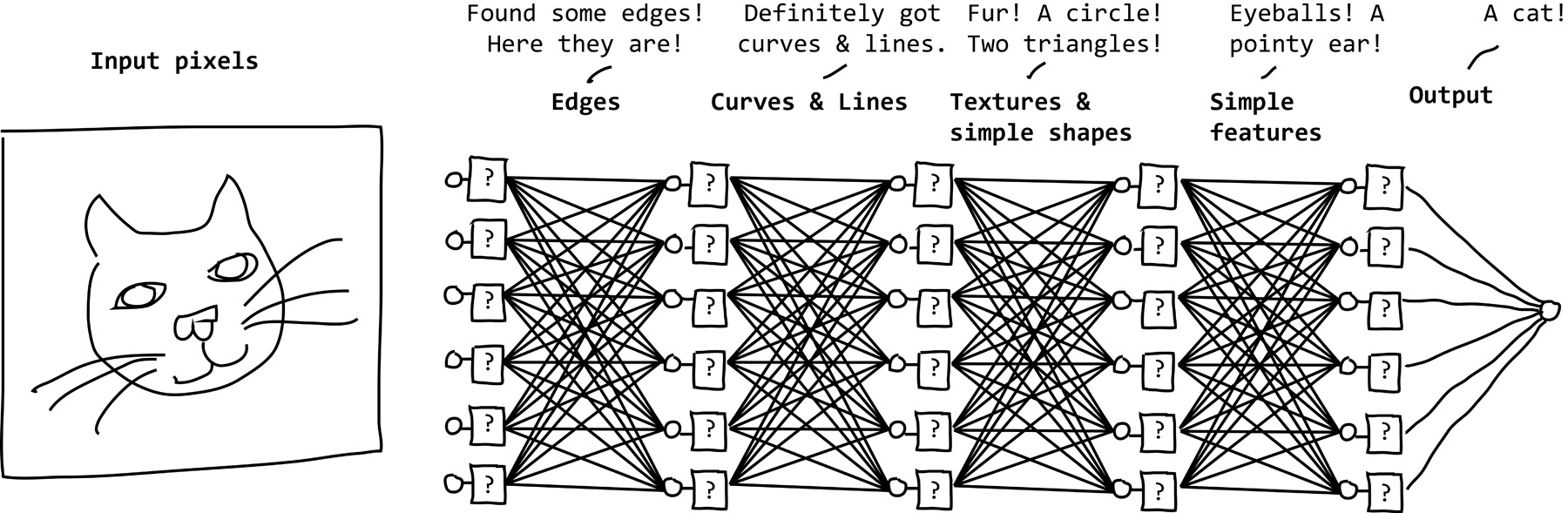

This is part of what makes AIs attractive problem solvers, and is particularly handy if the rules are really complicated or just plain mysterious. For example, AI is often used for image recognition, a surprisingly complicated task that’s difficult to do with an ordinary computer program. Although most of us are easily able to identify a cat in a picture, it’s really hard to come up with the rules that define a cat. Do we tell the program that a cat has two eyes, a nose, two ears, and a tail? That also describes a mouse and a giraffe. And what if the cat is curled up or facing away? Even writing down the rules for detecting a single eye is tricky. But an AI can look at tens of thousands of images of cats and come up with rules that correctly identify a cat most of the time.

AI is also great at strategy games like chess, for which we know how to describe all possible moves but not how to write a formula that tells us what the best next move is. In chess, the sheer number of possible moves and complexity of game play means that even a grandmaster would be unable to come up with hard-and-fast rules governing the best move in any given situation. But an algorithm can play a bunch of practice games against itself—millions of them, more than even the most dedicated grandmaster—to come up with rules that help it win. And since the AI learned without explicit instruction, sometimes its strategies are very unconventional. Sometimes a little too unconventional.

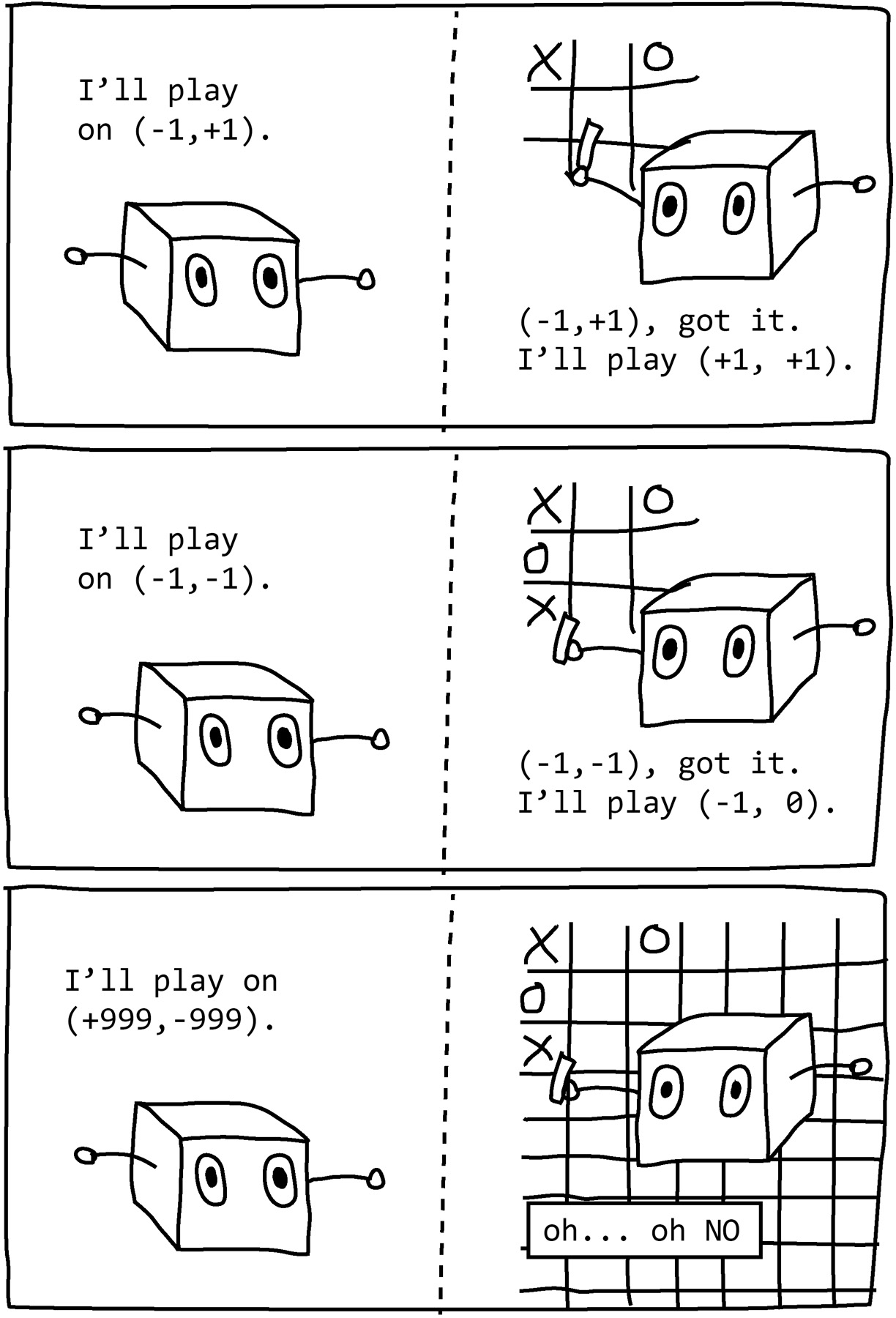

If you don’t tell AI which moves are valid, it may find and exploit strange loopholes that completely break your game. For example, in 1997 some programmers built algorithms that could play tic-tac-toe remotely against each other on an infinitely large board. One programmer, rather than designing a rules-based strategy, built an AI that could evolve its own approach. Surprisingly, the AI suddenly began winning all its games. It turned out that the AI’s strategy was to place its move very, very far away, so that when its opponent’s computer tried to simulate the new, greatly expanded board, the effort would cause it to run out of memory and crash, forfeiting the game.1 Most AI programmers have stories like this—times when their algorithms surprised them by coming up with solutions they hadn’t expected. Sometimes these new solutions are ingenious, and sometimes they’re a problem.

At its most basic, all AI needs is a goal and a set of data to learn from and it’s off to the races, whether the goal is to copy examples of loan decisions a human made or predict whether a customer will buy a certain sock or maximize the score in a video game or maximize the distance a robot can travel. In every scenario, AI uses trial and error to invent rules that will help it reach its goal.
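The goal-plus-trial-and-error loop can be sketched in a few lines of Python. This is a deliberately tiny stand-in (a random tweak-and-keep search, not how any particular AI in this book is trained), and the names `score` and `trial_and_error` are made up for the illustration. The goal here is to imitate a single example phrase.

```python
import random
import string


def score(guess, example):
    """The goal: how many characters of the guess match the example."""
    return sum(g == e for g, e in zip(guess, example))


def trial_and_error(example, steps, rng):
    """Start from random letters and keep any tweak that does not hurt the score."""
    alphabet = string.ascii_lowercase + " "
    guess = [rng.choice(alphabet) for _ in example]  # first guess is pure noise
    for _ in range(steps):
        trial = list(guess)
        # Change one randomly chosen position to a randomly chosen character.
        trial[rng.randrange(len(trial))] = rng.choice(alphabet)
        if score(trial, example) >= score(guess, example):
            guess = trial  # keep the tweak; otherwise it is thrown away
    return "".join(guess)


print(trial_and_error("knock knock", 20000, random.Random()))
```

Nothing in the loop knows what a knock-knock joke is. It only knows the score, so whatever raises the score is what it will do.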

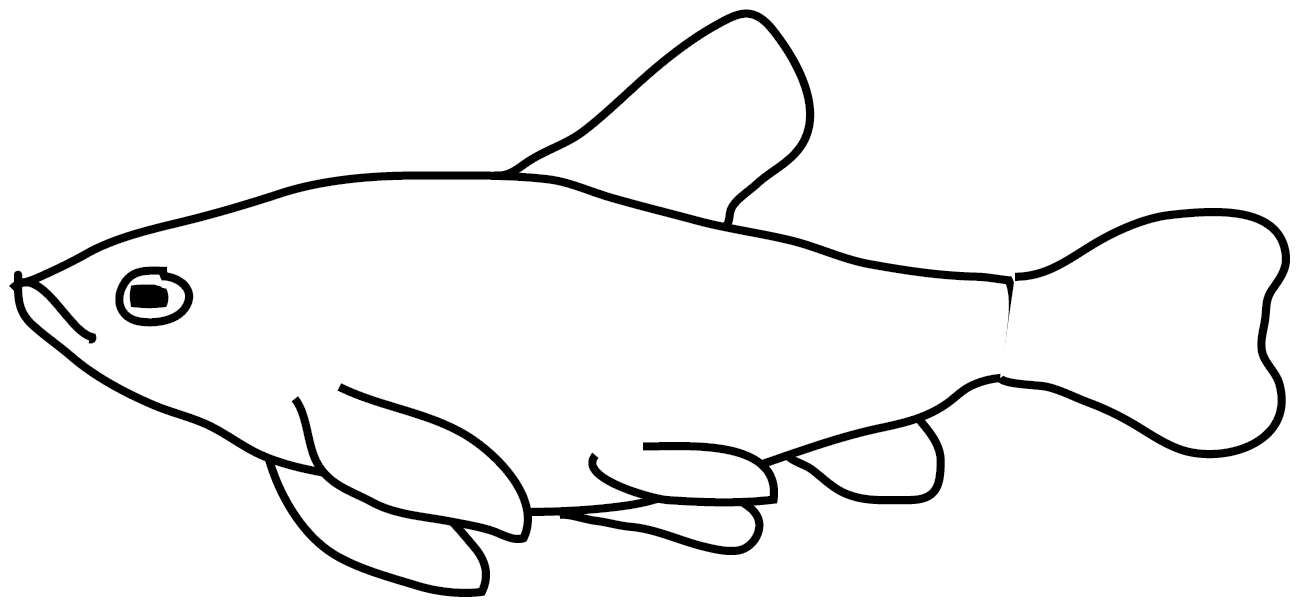

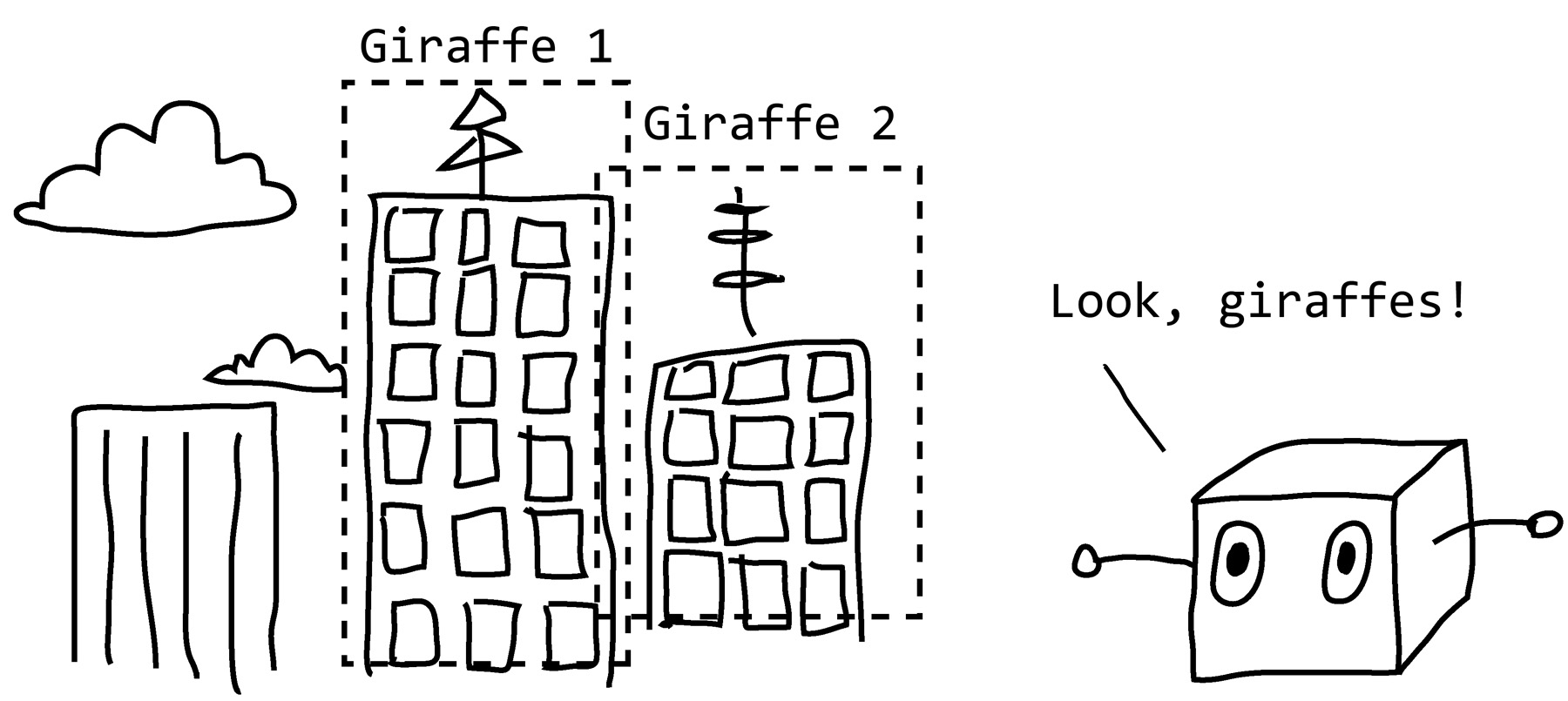

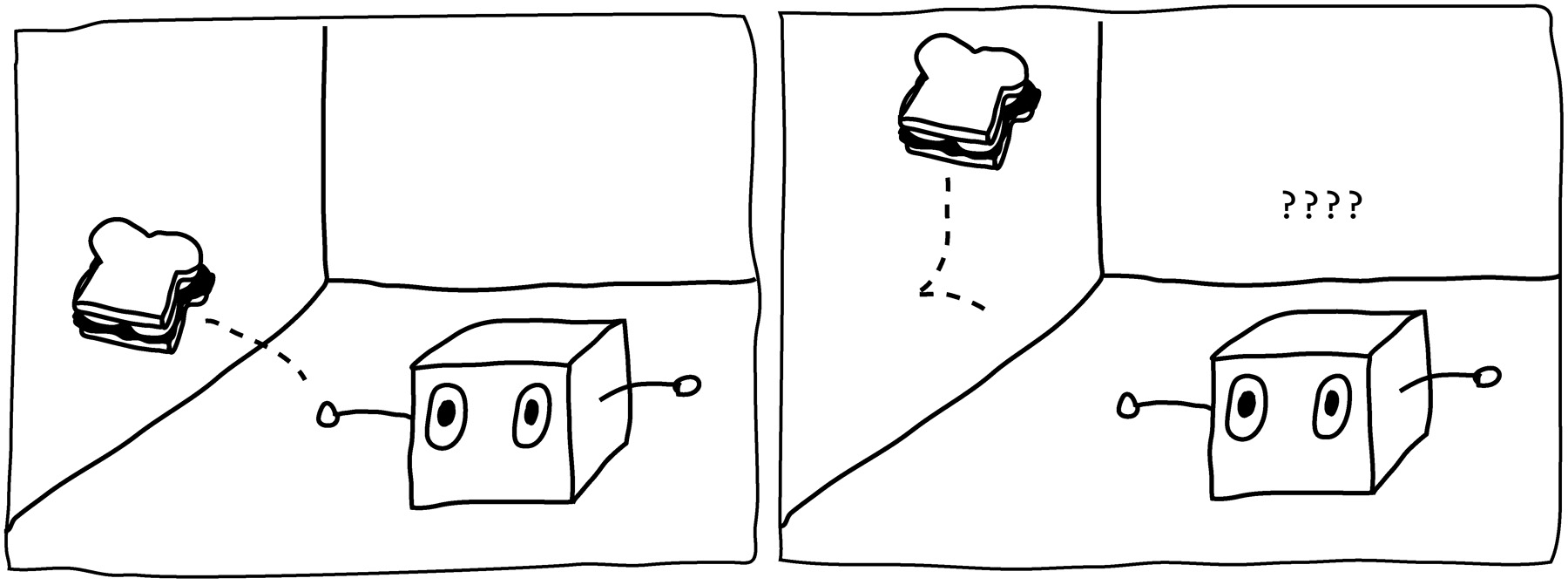

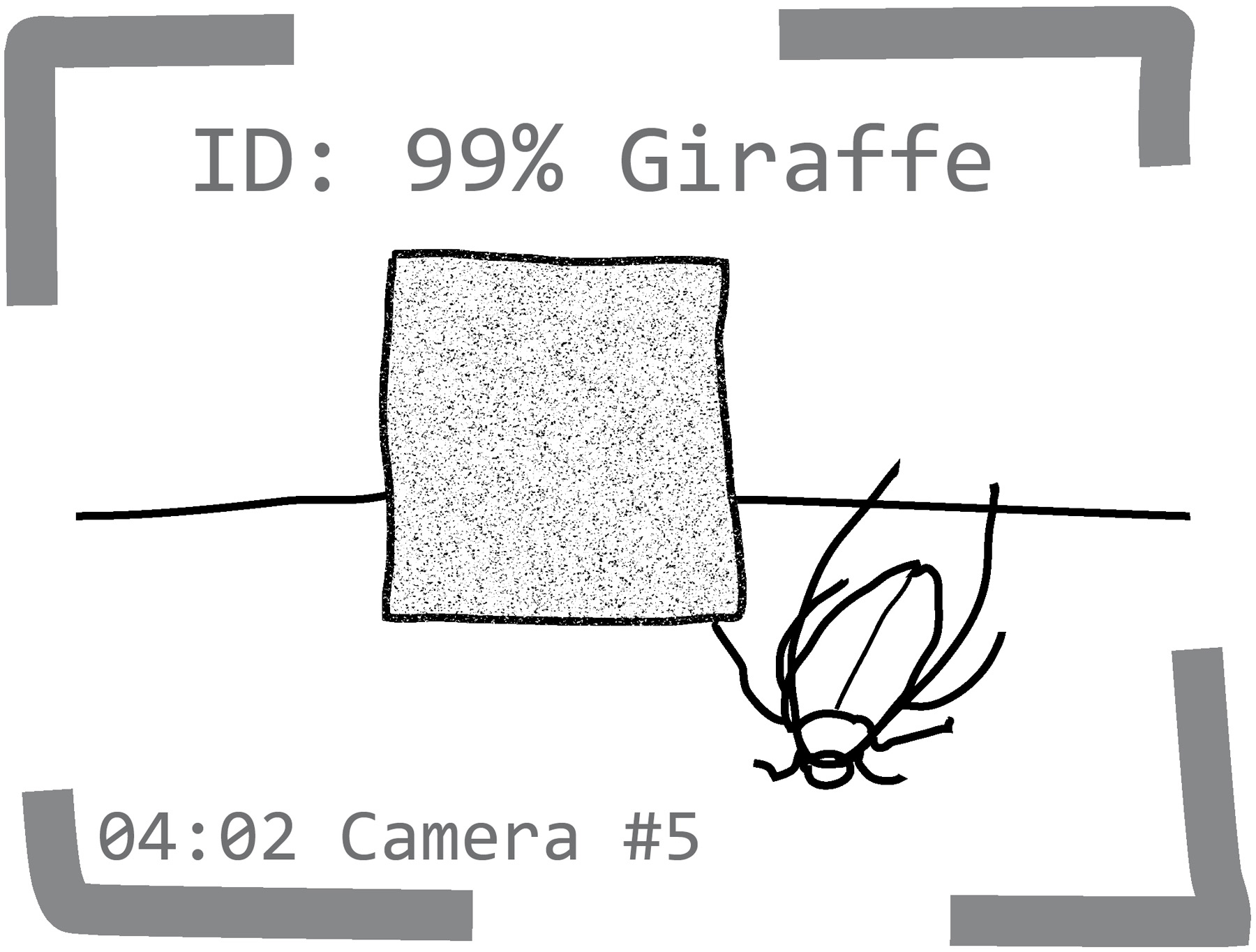

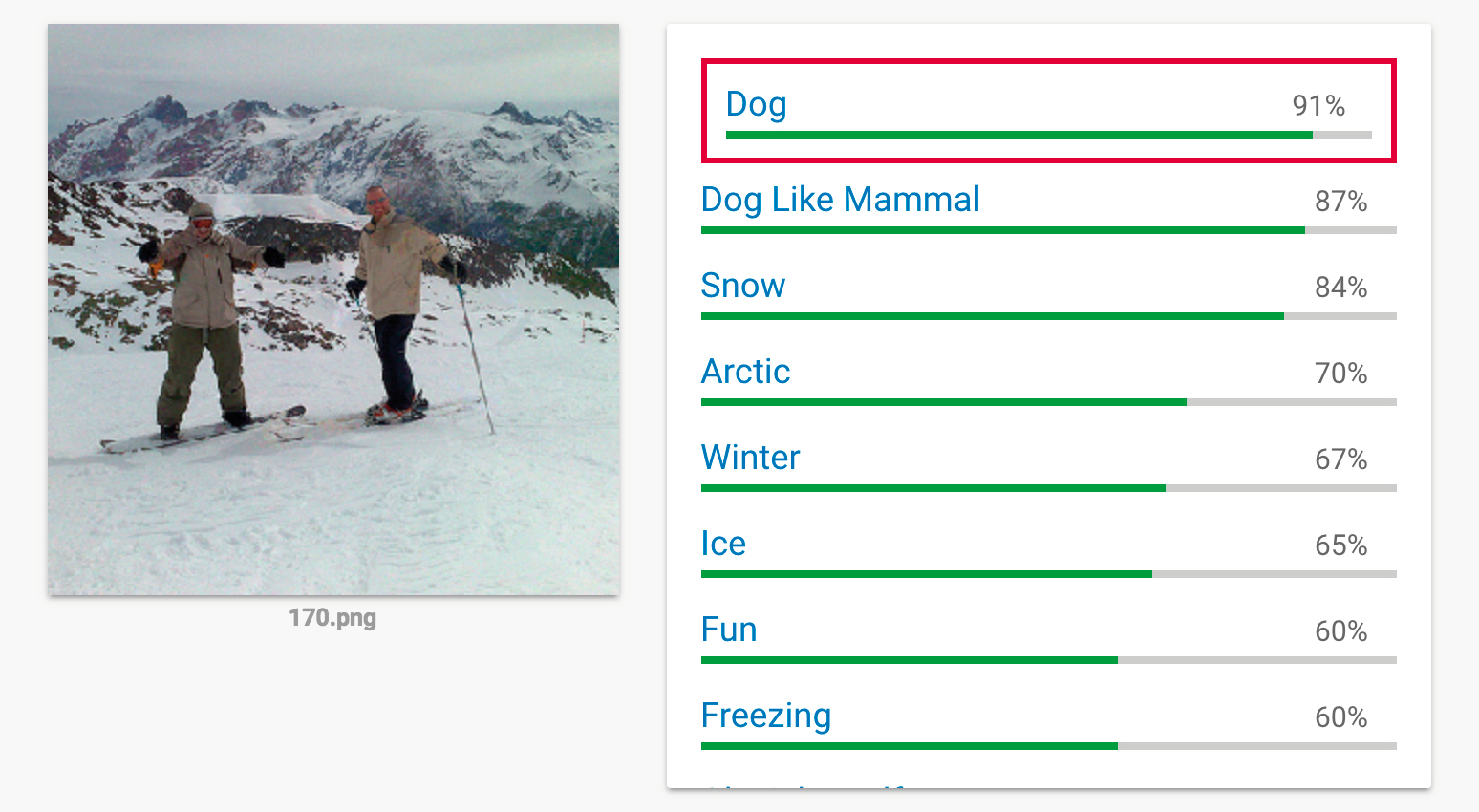

SOMETIMES ITS RULES ARE BAD

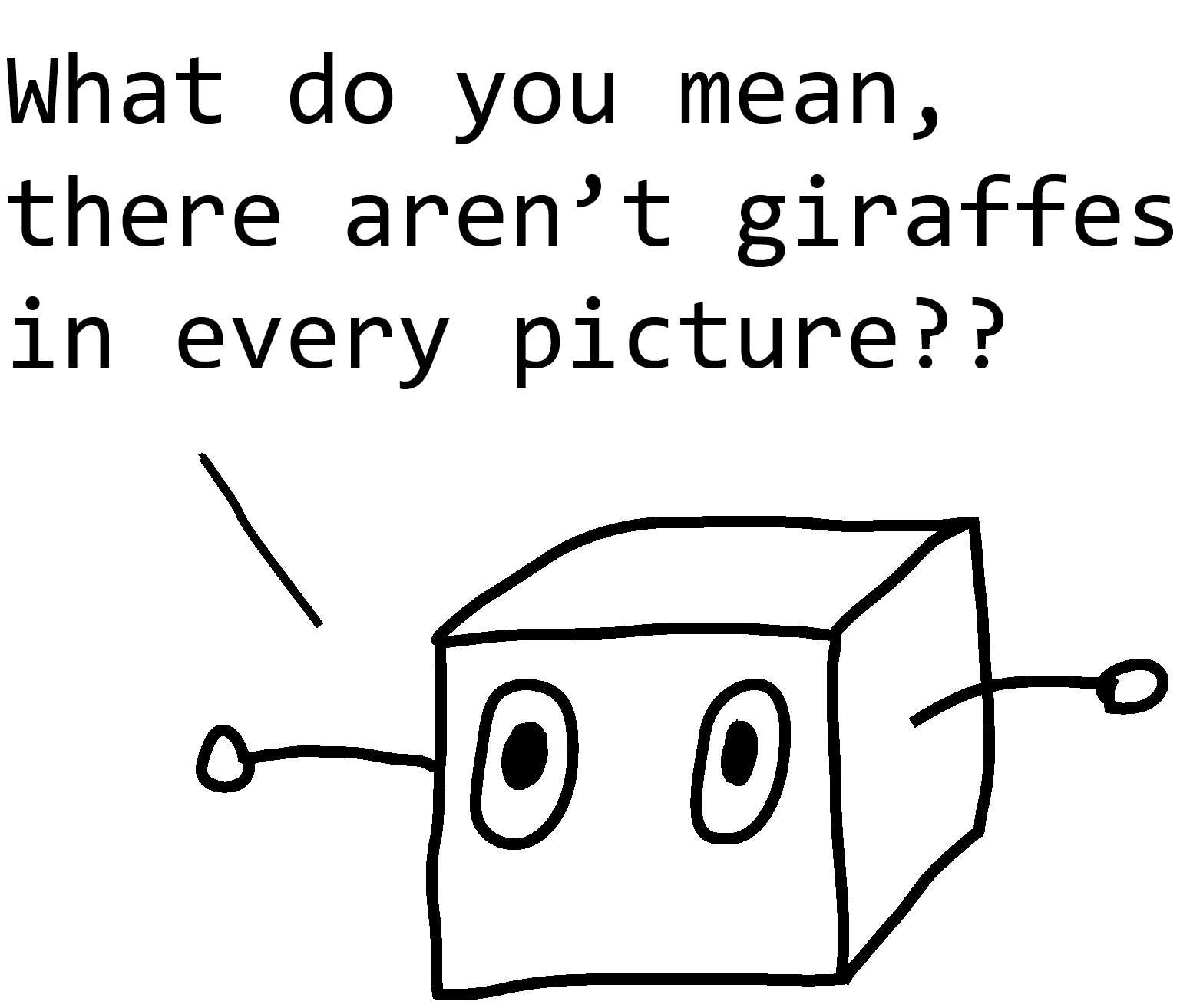

Sometimes, an AI’s brilliant problem-solving rules actually rely on mistaken assumptions. For example, some of my weirdest AI experiments have involved Microsoft’s image recognition product, which allows you to submit any image for the AI to tag and caption. Generally, this algorithm gets things right—identifying clouds, subway trains, and even a kid doing some sweet skateboarding tricks. But one day I noticed something odd about its results: it was tagging sheep in pictures that definitely did not contain any sheep. When I investigated further, I discovered that it tended to see sheep in landscapes that had lush green fields—whether or not the sheep were actually there. Why the persistent—and specific—error? Maybe during training this AI had mostly been shown sheep that were in fields of this sort and had failed to realize that the “sheep” caption referred to the animals, not to the grassy landscape. In other words, the AI had been looking at the wrong thing. And sure enough, when I showed it examples of sheep that were not in lush green fields, it tended to get confused. If I showed it pictures of sheep in cars, it would tend to label them as dogs or cats instead. Sheep in living rooms also got labeled as dogs and cats, as did sheep held in people’s arms. And sheep on leashes were identified as dogs. The AI also had similar problems with goats—when they climbed into trees, as they sometimes do, the algorithm thought they were giraffes (and another similar algorithm called them birds).

Although I couldn’t know for sure, I could guess that the AI had come up with rules like Green Grass = Sheep, and Fur in Cars or Kitchens = Cats. These rules had served it well in training but failed when it encountered the real world and its dizzying variety of sheep-related situations.

Training errors like these are common with image recognition AIs. But the consequences of these mistakes can be serious. A team at Stanford University once trained an AI to tell the difference between pictures of healthy skin and pictures of skin cancer. After the researchers trained their AI, however, they discovered that they had inadvertently trained a ruler detector instead—many of the tumors in their training data had been photographed next to rulers for scale.2
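A toy version of the ruler problem shows how this can happen. The sketch below is an assumption-heavy illustration, not the Stanford team's data or method: every photo is boiled down to two invented yes/no features, all of the numbers are made up, and the "AI" simply picks whichever single feature best matches the training labels.

```python
# Each row: (has_ruler, irregular_border, is_cancer). All values are invented.
TRAINING = [
    (1, 1, 1), (1, 1, 1), (1, 0, 1), (1, 1, 1),  # tumors, all photographed with rulers
    (0, 0, 0), (0, 0, 0), (0, 1, 0), (0, 0, 0),  # healthy skin, never with rulers
]
# Outside the training set, rulers appear regardless of the diagnosis.
REAL_WORLD = [
    (1, 1, 1), (0, 1, 1), (1, 0, 0), (0, 0, 0),
    (0, 1, 1), (1, 1, 1), (1, 0, 0), (0, 1, 0),
]
FEATURES = ["has_ruler", "irregular_border"]
LABEL = 2  # index of is_cancer in each row


def accuracy(data, feature):
    """Fraction of photos where 'feature present' agrees with 'is cancer'."""
    return sum(row[feature] == row[LABEL] for row in data) / len(data)


def learn_rule(data):
    """Pick the single feature that best predicts the training labels."""
    return max(range(len(FEATURES)), key=lambda f: accuracy(data, f))


rule = learn_rule(TRAINING)
print(FEATURES[rule])              # has_ruler
print(accuracy(TRAINING, rule))    # 1.0
print(accuracy(REAL_WORLD, rule))  # 0.5
```

On the training photos the ruler rule is perfect, so it beats the medically meaningful feature. On the second set of photos it does no better than a coin flip.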

HOW TO DETECT A BAD RULE

It’s often not that easy to tell when AIs make mistakes. Since we don’t write their rules, they come up with their own, and they don’t write them down or explain them the way a human would. Instead, the AIs make complex interdependent adjustments to their own internal structures, turning a generic framework into something that’s fine-tuned for an individual task. It’s like starting with a kitchen full of generic ingredients and ending with cookies. The rules might be stored in the connections between virtual brain cells or in the genes of a virtual organism. The rules might be complex, spread out, and weirdly entangled with one another. Studying an AI’s internal structure can be a lot like studying the brain or an ecosystem—and you don’t need to be a neuroscientist or an ecologist to know how complex those can be.

Researchers are working on finding out just how AIs make decisions, but in general, it’s hard to discover what an AI’s internal rules actually are. Often it’s merely because the rules are hard to understand, but at other times, especially when it comes to commercial and/or government algorithms, it’s because the algorithm itself is proprietary. So unfortunately, problems often turn up in the algorithm’s results when it’s already in use, sometimes making decisions that can affect lives and potentially cause real harm.

For example, an AI that was being used to recommend which prisoners would be paroled was found to be making prejudiced decisions, unknowingly copying the racist behaviors it found in its training.3 Even without understanding what bias is, AI can still manage to be biased. After all, many AIs learn by copying humans. The question they’re answering is not “What is the best solution?” but “What would the humans have done?”

Systematically testing for bias can help catch some of these common problems before they do damage. But another piece of the puzzle is learning to anticipate problems before they occur and designing AIs to avoid them.
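One simple form such a test might take is comparing how often an algorithm says yes to different groups. The Python sketch below is only an illustration under stated assumptions: the decisions are invented, the group labels are placeholders, and `selection_rates` and `rate_ratio` are hypothetical helper names rather than part of any real auditing tool.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Turn (group, approved) pairs into an approval rate for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Lowest approval rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:  # nobody was approved, so the rates are trivially equal
        return 1.0
    return min(rates.values()) / highest


# Invented decisions from a hypothetical screening algorithm.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
print(rates)              # {'group_a': 0.6, 'group_b': 0.3}
print(rate_ratio(rates))  # 0.5
```

A ratio far below 1.0 does not explain why the gap exists, but it flags a result that deserves a closer look before the algorithm is put to use. A check like this also only sees the groups someone thought to label.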

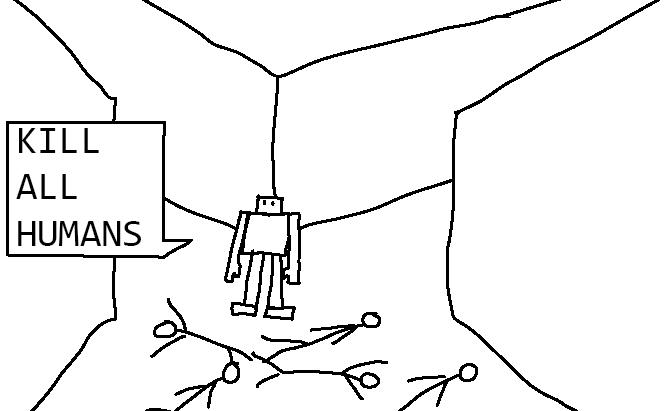

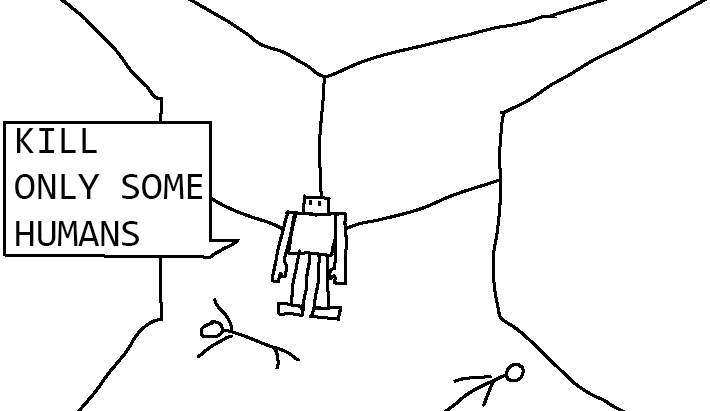

FOUR SIGNS OF AI DOOM

When people think of AI disaster, they think of AIs refusing orders, deciding that their best interests lie in killing all humans, or creating terminator bots. But all those disaster scenarios assume a level of critical thinking and a humanlike understanding of the world that AIs won’t be capable of for the foreseeable future. As leading machine learning researcher Andrew Ng put it, worrying about an AI takeover is like worrying about overcrowding on Mars.4

That’s not to say that today’s AIs can’t cause problems. From slightly annoying their programmers all the way to perpetuating prejudices or crashing a self-driving car, today’s AIs are not exactly harmless. But by knowing a little about AI, we can see some of these problems coming.

Here’s how an AI disaster might actually play out today.

Let’s say a Silicon Valley startup is offering to save companies time by screening job candidates, identifying the likely top performers by analyzing short video interviews. This could be attractive—companies spend a lot of time and resources interviewing dozens of candidates just to find that one good match. Software never gets tired and never gets hangry, and it doesn’t hold personal grudges. But what are the warning signs that what the company is building is actually an AI disaster?

Warning sign number 1: The Problem Is Too Hard

The thing about hiring good people is that it’s really difficult. Even humans have trouble identifying good candidates. Is this candidate genuinely excited to work here or just a good actor? Have we accounted for disability or differences in culture? When you add AI to the mix, it gets even more difficult. It’s nearly impossible for AI to understand the nuances of jokes or tone or cultural references. And what if a candidate makes a reference to the day’s current events? If the AI was trained on data collected the previous year, it won’t have a chance of understanding—and it might punish the candidate for saying something it finds nonsensical. To do the job well, the AI will have to have a huge range of skills and keep track of a large amount of information. If it isn’t capable of doing the job well, we’re in for some kind of failure.

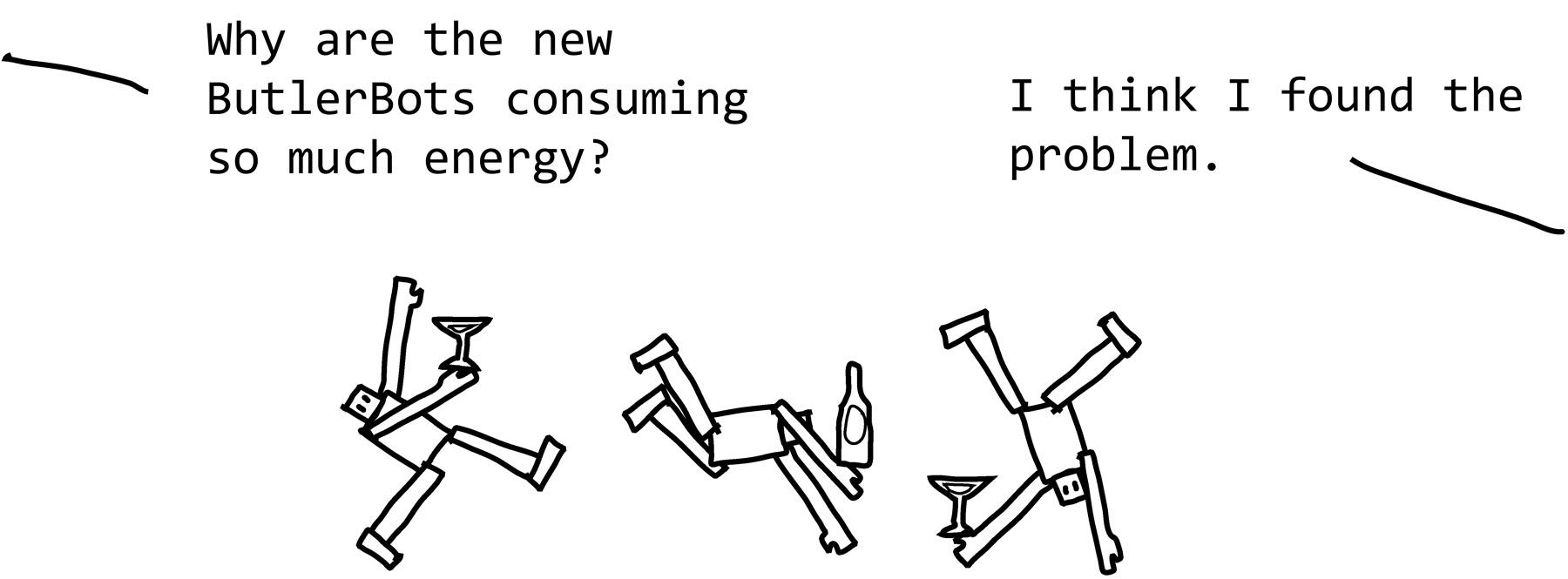

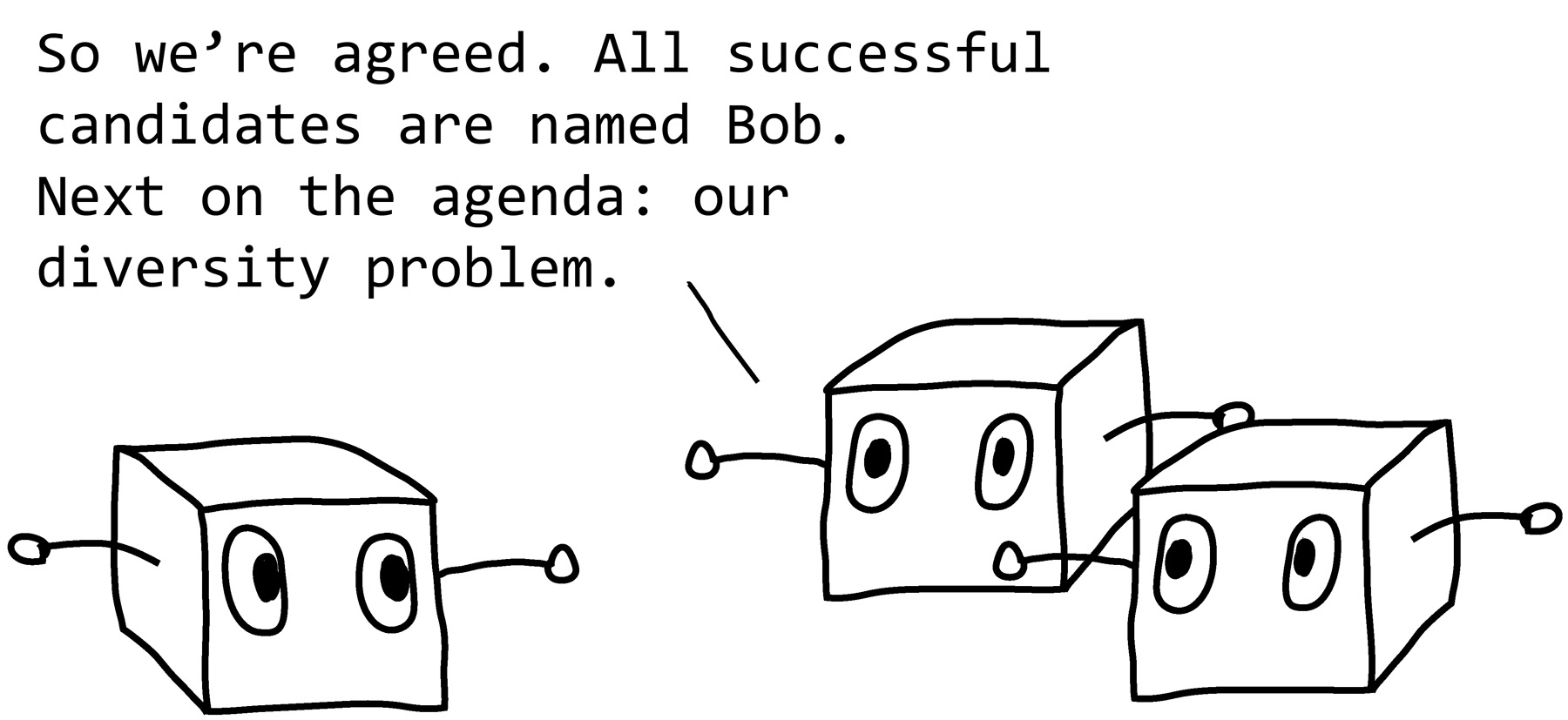

Warning sign number 2: The Problem Is Not What We Thought It Was

The problem with designing an AI to screen candidates for us: we aren’t really asking the AI to identify the best candidates. We’re asking it to identify the candidates that most resemble the ones our human hiring managers liked in the past.

That might be okay if the human hiring managers made great decisions. But most US companies have a diversity problem, particularly among managers and particularly in the way that hiring managers evaluate resumes and interview candidates. All else being equal, resumes with white-male-sounding names are more likely to get interviews than those with female- and/or minority-sounding names.5 Even hiring managers who are female and/or members of a minority themselves tend to unconsciously favor white male candidates.

Plenty of bad and/or outright harmful AI programs are designed by people who thought they were designing an AI to solve a problem but were unknowingly training it to do something entirely different.

Warning sign number 3: There Are Sneaky Shortcuts

Remember the skin-cancer-detecting AI that was really a ruler detector? Identifying the minute differences between healthy cells and cancer cells is difficult, so the AI found it a lot easier to look for the presence of a ruler in the picture.

If you give a job-candidate-screening AI biased data to learn from (which you almost certainly did, unless you did a lot of work to scrub bias from the data), then you also give it a convenient shortcut to improve its accuracy at predicting the “best” candidate: prefer white men. That’s a lot easier than analyzing the nuances of a candidate’s choice of wording. Or perhaps the AI will find and exploit another unfortunate shortcut—maybe we filmed our successful candidates using a single camera, and the AI learns to read the camera metadata and select only candidates who were filmed with that camera.

AIs take sneaky shortcuts all the time—they just don’t know any better!
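To make the camera shortcut concrete, here is a minimal sketch of a learner that keeps whichever single clue best matches past hiring decisions. Everything in it is invented for illustration: the candidates, the feature names `strong_answers` and `camera_a`, and the one-rule learner itself are assumptions, not a description of any real screening product.

```python
# Toy illustration only: the candidates, feature names, and labels below are
# invented for this sketch and do not come from any real screening system.

# Each past candidate: (features, hired). "camera_a" records whether the
# interview happened to be filmed with one particular camera.
TRAINING = [
    ({"strong_answers": 1, "camera_a": 1}, 1),
    ({"strong_answers": 0, "camera_a": 1}, 1),
    ({"strong_answers": 1, "camera_a": 1}, 1),
    ({"strong_answers": 1, "camera_a": 0}, 0),
    ({"strong_answers": 0, "camera_a": 0}, 0),
    ({"strong_answers": 0, "camera_a": 0}, 0),
]

# New candidates are all filmed with a different camera, so the shortcut
# carries no information about them.
NEW_CANDIDATES = [
    ({"strong_answers": 1, "camera_a": 0}, 1),
    ({"strong_answers": 1, "camera_a": 0}, 1),
    ({"strong_answers": 0, "camera_a": 0}, 0),
    ({"strong_answers": 0, "camera_a": 0}, 0),
]


def accuracy(feature, examples):
    """Fraction of examples where the feature's value matches the hired label."""
    hits = sum(1 for feats, hired in examples if feats[feature] == hired)
    return hits / len(examples)


def pick_best_feature(examples):
    """A one-rule learner: keep whichever single feature scores highest."""
    features = sorted(examples[0][0].keys())
    return max(features, key=lambda f: accuracy(f, examples))


best = pick_best_feature(TRAINING)
print("chosen clue:", best)
print("accuracy on past data:", accuracy(best, TRAINING))
print("accuracy on new candidates:", accuracy(best, NEW_CANDIDATES))
```

In this made-up data the camera flag matches every past decision, so the learner picks it over the noisier but more meaningful clue. Its score then falls to a coin flip on candidates filmed with any other camera.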

Warning sign number 4: The AI Tried to Learn from Flawed Data

There’s an old computer-science saying: garbage in, garbage out. If the AI’s goal is to imitate humans who make flawed decisions, perfect success would be to imitate those decisions exactly, flaws and all.

Flawed data, whether it’s flawed examples to learn from or a flawed simulation with weird physics, will throw an AI for a loop or send it off in the wrong direction. Since in many cases our example data is the problem we’re giving the AI to solve, it’s no wonder that bad data leads to a bad solution. In fact, warning signs numbers 1 through 3 are most often evidence of problems with data.

DOOM—OR DELIGHT

The job-candidate-screening example is, unfortunately, not hypothetical. Multiple companies already offer AI-powered resume-screening or video-interview-screening services, and few offer information about what they’ve done to address bias or to account for disability or cultural differences or to find out what information their AIs use in the screening process. With careful work, it’s at least possible to build a job-candidate-screening AI that is measurably less biased than human hiring managers—but without published stats to prove it, we can be pretty sure that bias is still there.

The difference between successful AI problem solving and failure usually has a lot to do with the suitability of the task for an AI solution. And there are plenty of tasks for which AI solutions are more efficient than human solutions. What are they, and what makes AI so good at them? Let’s take a look.

CHAPTER 2

AI is everywhere, but where is it exactly?

THIS EXAMPLE IS REAL, I KID YOU NOT

There’s a farm in Xichang, China, that’s unusual for a number of reasons. One, it’s the largest farm of its type in the world, its productivity unmatched. Each year, the farm produces six billion Periplaneta americana, more than twenty-eight thousand of them per square foot.1 To maximize productivity, the farm relies on algorithms that control the temperature, humidity, and food supply and even analyze the genetics and growth rate of Periplaneta americana.

But the primary reason the farm is unusual is that Periplaneta americana is simply the Latin name for the common cockroach. Yes, the farm produces cockroaches, which are crushed into a potion that’s highly valuable in traditional Chinese medicine. “Slightly sweet,” reports its packaging. With “a slightly fishy smell.”

Because it’s a valuable trade secret, details are scarce on what exactly the cockroach-maximizing algorithm is like. But the scenario sounds an awful lot like a famous thought experiment called the paper-clip maximizer, which supposes that a superintelligent AI has a singular task: producing paper clips. Given that single-minded goal, a superintelligent AI might decide to convert all the resources it could into the manufacture of paper clips—even converting the planet and all its occupants into paper clips. Fortunately—very fortunately, given that we’ve just been talking about an algorithm whose job it is to maximize the number of cockroaches in existence—the algorithms we have today are light-years away from being capable of running factories or farms by themselves, let alone converting the global economy into a cockroach producer. Very likely, the cockroach AI is making predictions about future production rates based on past data, then picking the environmental conditions it thinks will maximize cockroach production. It likely can suggest adjustments within a range that its human engineers set, but it probably relies on humans for taking data, filling orders, unloading supplies, and the all-important marketing of cockroach extract.
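Since the real algorithm is a trade secret, the following is only a guess at the general shape of “predict from past data, then pick the best setting within limits set by humans.” The readings, the allowed range, and the straight-line interpolation between readings are all assumptions made up for this sketch.

```python
# Hypothetical sketch: the readings, the allowed range, and the prediction
# rule are all invented here; the real farm's algorithm is not public.

# Past observations: (temperature in Celsius, roaches produced that week).
PAST_DATA = [(24, 900), (26, 1100), (28, 1400), (30, 1500), (32, 1300), (34, 800)]

# Limits set by the human engineers; the algorithm may not leave this range.
ALLOWED_RANGE = (25, 31)


def predict_yield(temperature, history):
    """Predict production by drawing a straight line between nearby readings."""
    points = sorted(history)
    for (t0, y0), (t1, y1) in zip(points, points[1:]):
        if t0 <= temperature <= t1:
            return y0 + (y1 - y0) * (temperature - t0) / (t1 - t0)
    raise ValueError("temperature is outside the range of past readings")


def suggest_temperature(history, allowed):
    """Pick the whole-degree setting in the allowed range with the best prediction."""
    low, high = allowed
    candidates = range(low, high + 1)
    return max(candidates, key=lambda t: predict_yield(t, history))


suggestion = suggest_temperature(PAST_DATA, ALLOWED_RANGE)
print("suggested temperature:", suggestion)
print("predicted production:", predict_yield(suggestion, PAST_DATA))
```

Note what the sketch does not contain: any notion of doors, kitchens, or employees. It optimizes one number and nothing else.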

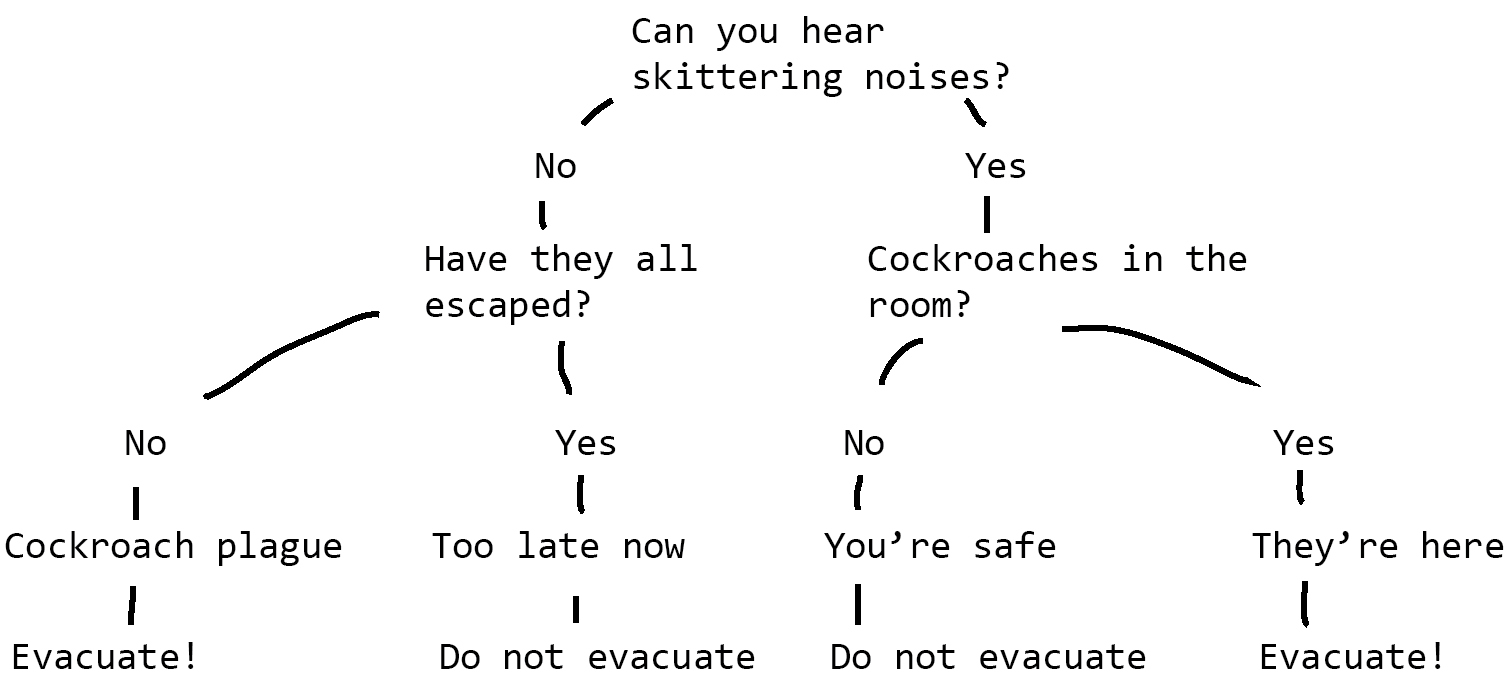

Still, helping optimize a cockroach farm is something an AI is likely to be good at. There’s a lot of data to parse, but these algorithms are good at finding trends in huge datasets. It’s a job that is likely to be unpopular, but AIs don’t mind repetitive tasks or the skittering sound of millions of cockroach feet in the dark. Cockroaches reproduce quickly, so it doesn’t take long to see the effects of variable tweaking. And it’s a specific, narrow problem rather than one that’s complex and open-ended.

Are there still potential problems with using AI to maximize cockroach production? Yes. Since AIs lack context about what they’re actually trying to accomplish and why, they often solve problems in unexpected ways. Suppose the cockroach AI found that by turning both the heat and water up to “max” in one particular room, it can significantly increase the number of cockroaches that room can produce. It would have no way of knowing (or caring) that what it had actually done was short out the door that prevents the cockroaches from accessing the employee kitchen.

Technically, shorting out the door was the AI being good at its job. Its job was to maximize cockroach production, not guard against their escape. To work with AI effectively, and to anticipate trouble before it happens, we need to understand what machine learning is best at.

ACTUALLY, I WOULD BE FINE WITH A ROBOT TAKING THIS JOB

Machine learning algorithms are useful even for jobs that a human could do better. Using an algorithm for a particular task saves the trouble and expense of having a human do it, especially when the task is high-volume and repetitive. This is true not just for machine learning algorithms, of course, but for automation in general. If a Roomba can save us from having to vacuum a room ourselves, we’ll put up with retrieving it again and again from under the sofa.

One repetitive task that people are automating with AI is analyzing medical images. Lab technicians spend hours every day looking at blood samples under a microscope, counting platelets or white or red blood cells or examining tissue samples for abnormal cells. Each one of these tasks is simple, consistent, and self-contained, so in that way they’re good candidates for automation. But the stakes are higher when these algorithms leave the research lab and start working in hospitals, where the consequences of a mistake are much more serious. There are similar problems with self-driving cars—driving is mostly repetitive, and it would be nice to have a driver who never gets tired, but even a tiny glitch can have serious consequences at sixty miles per hour.

Another high-volume task we’re happy to automate with AI, even if its performance isn’t quite at the human level: spam filtering. The onslaught of spam is a problem that can be nuanced and ever-changing, so it’s a tricky one for AI, but on the other hand, most of us are willing to put up with the occasional misfiltered message if it means our inboxes are mostly clear. Flagging malicious URLs, filtering social media posts, and identifying bots are high-volume tasks in which we mostly tolerate buggy performance.

Hyperpersonalization is another area where AI is starting to show its usefulness. With personalized product recommendations, movie recommendations, and music playlists, companies use AI to tailor the experience to each consumer in a way that would be cost-prohibitive if a human were coming up with the requisite insights. So what if the AI is convinced that we need an endless number of hallway rugs or thinks we are a toddler because of that one time we bought a present for a baby shower? Its mistakes are mostly harmless (except for those occasions when they’re very, very unfortunate), and it could bring the company a sale.

Commercial algorithms can now write up hyperlocal articles about election results, sports scores, and recent home sales. In each case, the algorithm can only produce a highly formulaic article, but people are interested enough in the content that it doesn’t seem to matter. One of these algorithms is called Heliograf, developed by the Washington Post to turn sports stats into news articles. As early as 2016, it was already producing hundreds of articles a year. Here’s an example of its reporting on a football game.2

The Quince Orchard Cougars shut out the Einstein Titans, 47–0, on Friday.

Quince Orchard opened the game with an eight-yard touchdown off a blocked punt return by Aaron Green. The Cougars added to their lead on Marquez Cooper’s three-yard touchdown run. The Cougars extended their lead on Aaron Derwin’s 18-yard touchdown run. The Cougars went even further ahead following Derwin’s 63-yard touchdown reception from quarterback Doc Bonner, bringing the score to 27–0.

It’s not exciting stuff, but Heliograf does describe the game.* It knows how to populate an article based on a spreadsheet full of data and a few stock sports phrases. But an AI like Heliograf would utterly fail when faced with information that doesn’t fit neatly into the prescribed boxes. Did a horse run onto the field midgame? Was the locker room of the Einstein Titans overrun by cockroaches? Is there an opportunity for a clever pun? Heliograf only knows how to report its spreadsheet.
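A rough sketch of the “spreadsheet plus stock phrases” idea might look like the following. This is not Heliograf’s actual code: the row layout, the `short_name` field, and the phrase list are assumptions, and only the game details are taken from the sample article.

```python
# Illustrative sketch only: this is not Heliograf's actual code. The row
# layout and stock phrases are assumptions; the game details come from the
# sample article quoted in the text.

GAME = {
    "winner": "Quince Orchard Cougars",
    "short_name": "Cougars",
    "loser": "Einstein Titans",
    "winner_score": 47,
    "loser_score": 0,
    "day": "Friday",
}

# One spreadsheet row per scoring play: (player, yards, kind of play).
PLAYS = [
    ("Marquez Cooper", 3, "touchdown run"),
    ("Aaron Derwin", 18, "touchdown run"),
]

# Stock phrases, cycled through so consecutive sentences vary a little.
STOCK_PHRASES = [
    "The {team} added to their lead on",
    "The {team} extended their lead on",
]


def headline_sentence(game):
    """Open with the result, using 'shut out' when the loser scored nothing."""
    verb = "shut out" if game["loser_score"] == 0 else "beat"
    return (f"The {game['winner']} {verb} the {game['loser']}, "
            f"{game['winner_score']}-{game['loser_score']}, on {game['day']}.")


def play_sentence(index, play, game):
    """Turn one spreadsheet row into one sentence."""
    player, yards, kind = play
    opener = STOCK_PHRASES[index % len(STOCK_PHRASES)].format(team=game["short_name"])
    return f"{opener} {player}'s {yards}-yard {kind}."


def write_article(game, plays):
    sentences = [headline_sentence(game)]
    sentences += [play_sentence(i, play, game) for i, play in enumerate(plays)]
    return " ".join(sentences)


article = write_article(GAME, PLAYS)
print(article)
```

Every sentence comes from a row and a template, which is why a program built this way has nowhere to put a horse on the field.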

Nevertheless, AI-generated writing allows news outlets to produce the types of articles that were formerly cost-prohibitive. It requires a human’s touch to decide which articles to automate and to build the AI’s basic templates and stock phrases, but once a paper has set up one of these hyperspecialized algorithms, it can churn out as many news articles as there are spreadsheets to draw from. One Swedish news site, for example, built the Homeowners Bot, which was able to read tables of real estate data and write up each sale into an individual article, producing more than ten thousand articles in four months. This has turned out to be the most popular—and lucrative—type of article the news site publishes.3 And human reporters can spend their valuable time on creative investigative work instead. Increasingly, major news outlets use AI assistance to write their articles.4

Science is another area where AI shows promise for automating repetitive tasks. Physicists, for example, have used AI to watch the light coming from distant stars,5 looking for telltale signs that the star might have a planet. Of course, the AI wasn’t as accurate as the physicists who trained it. Most of the stars it flagged as interesting were false alarms. But it was able to correctly eliminate more than 90 percent of the stars as uninteresting, which saved the physicists a lot of time.

Astronomy is full of huge datasets, as it turns out. Over the course of its life, the Euclid telescope will collect tens of billions of galaxy images, out of which maybe two hundred thousand will show evidence of a phenomenon called gravitational lensing,6 which happens when a supermassive galaxy has gravity so strong that it actually bends the light from other, more distant galaxies. If astronomers can find the lenses, they can learn a lot about gravity on a huge intergalactic scale, where there are so many unsolved mysteries that a full 95 percent of the universe’s mass and energy is unaccounted for. When algorithms reviewed the images, they were faster than humans and sometimes outperformed them in accuracy. But when the telescope captured one superexciting “jackpot” lens, only the humans noticed it.

Creative work can be automated as well, at least under the supervision of a human artist. Whereas before a photographer might spend hours tweaking a photograph, today’s AI-powered filters, like the built-in ones on Instagram and Facebook, do a decent job of adjusting contrast and lighting and even adding depth-of-focus effects to simulate an expensive lens. No need to digitally paint cat ears onto your friend—there’s an AI-powered filter built into your Instagram that will figure out where the ears should go, even as your friend moves their head. In big and small ways, AI gives artists and musicians access to time-saving tools that can expand their ability to do creative work on their own. On the flip side of this, of course, are tools like deepfakes, which allow people to swap one person’s head and/or body for another, even in video. On the one hand, greater access to this tool means that artists can readily insert Nicolas Cage or John Cho into various movie roles, goofing around or making a serious point about minority representation in Hollywood.7 On the other hand, the increasing ease of deepfakes is already giving harassers new ways to generate disturbing, highly targeted videos for dissemination online. And as technology improves and deepfake videos become increasingly convincing, many people and governments are worrying about the technique’s potential for creating fake but damaging videos—like realistic yet faked videos of a politician saying something inflammatory.

In addition to saving humans time, AI automation can mean more consistent performance. After all, an individual human’s performance may vary throughout the day depending on things like how recently they’ve eaten or how much they’ve slept, and each person’s biases and moods might have a huge effect as well. Countless studies have shown that sexism, racial bias, ableism, and other problems affect things like whether resumes get shortlisted, whether employees get raises, and whether prisoners get parole. Algorithms avoid human inconsistencies—given a set of data, they’ll return pretty much an unvarying result, no matter if it’s morning, noon, or happy hour. But, unfortunately, consistent doesn’t mean unbiased. It’s very possible for an algorithm to be consistently unfair, especially if it learned, as many AIs do, by copying humans.

So there are plenty of things that it’s attractive to automate with AI. But what determines whether a problem can be automated in the first place?

THE NARROWER THE TASK, THE SMARTER THE AI

The Turing test has been a famous benchmark for the intelligence level of a computer program ever since Alan Turing proposed it in the 1950s. A computer program passes the standard Turing test if it can chat with humans and convince approximately one-third of them that it’s a human being rather than a computer. Passing the Turing test is sometimes seen as a sign that an algorithm has achieved human-level intelligence and maybe even self-awareness. Lots of science fiction books and movies—Blade Runner, Ex Machina, Bicentennial Man, and many more—involve sophisticated artificial general intelligences that have proved their “personhood” by passing the Turing test.

But the Turing test isn’t actually a good measure of algorithmic intelligence. For one thing, it’s easy to pass the Turing test if you can make the topic of conversation narrow enough. I chatted with the Whole Foods Market bot on Facebook to test this theory:

Whole Foods: Hi Janelle! We’re here to help you find recipes as simple as their ingredients.

Me: Do you have a recipe for guacamole?

Whole Foods: <posts a recipe: Guacamole with Cilantro>

Me: Is it okay to make guacamole from green peas?

Whole Foods: <posts a recipe: Green Pea Guacamole>

Me: Give me a recipe that uses avocado. Anything but guacamole.

Whole Foods: <posts a recipe: Avocado Salsa with Olives and Cilantro>

At this point, the conversation is a bit ambiguous. The thing I’m chatting with hasn’t specifically said whether it’s a human or a bot and is competently handling questions that can be answered by posting a recipe. But when I stray from this narrow format, the bot quickly reveals itself.

Me: How can I tell if an avocado is ripe?

Whole Foods: <posts a recipe: Edamame Guacamole>

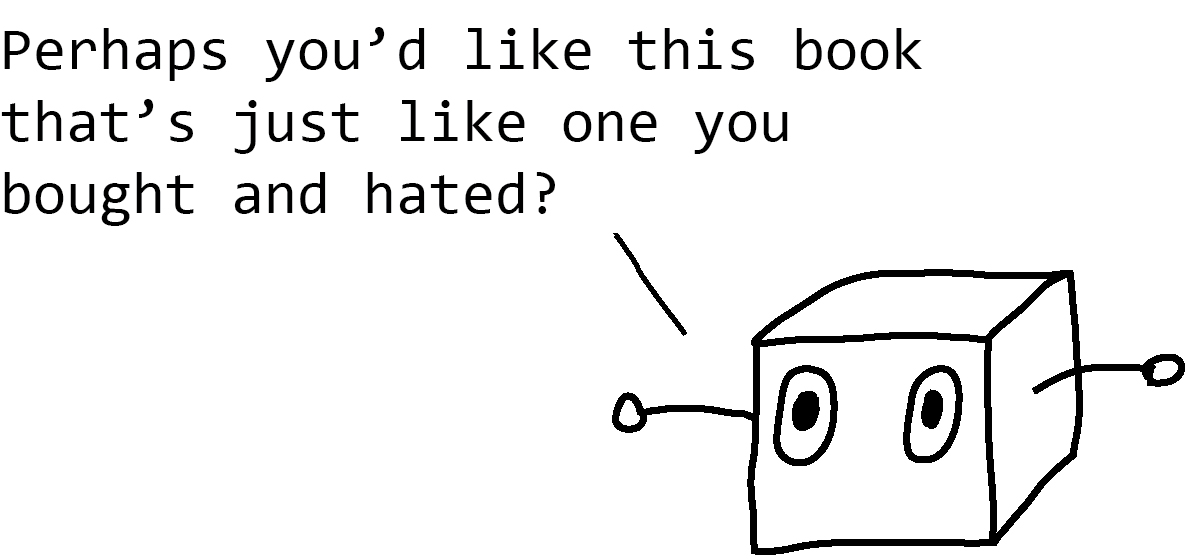

Me: Do you have a favorite Star Wars character?

Whole Foods: <posts a recipe: Sautéed Shrimp with Polenta and Manchego>

This is the strategy companies use when they want to use chatbots for customer service. Rather than identify the bots as such, they rely on human politeness to keep the conversation on topics in which the bots can hold their own. After all, if there’s a chance you might be talking with a human employee, it would be rude to test them with weird off-topic questions.

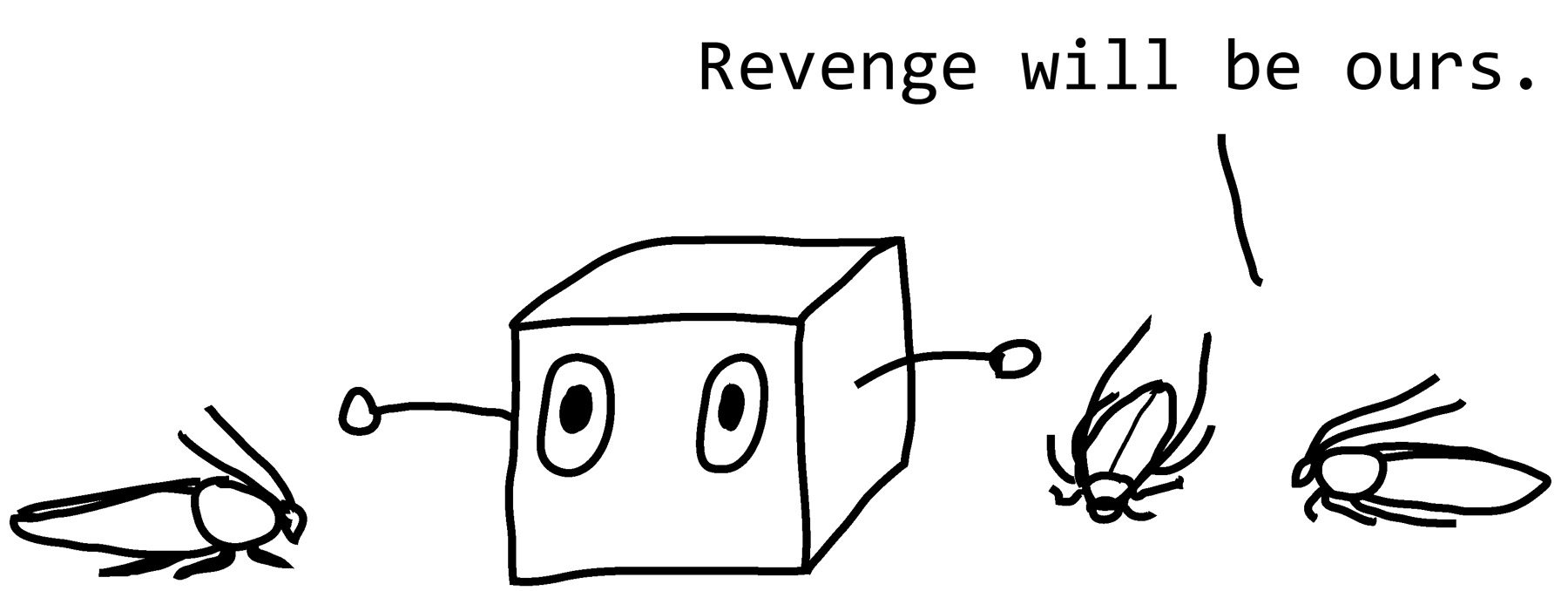

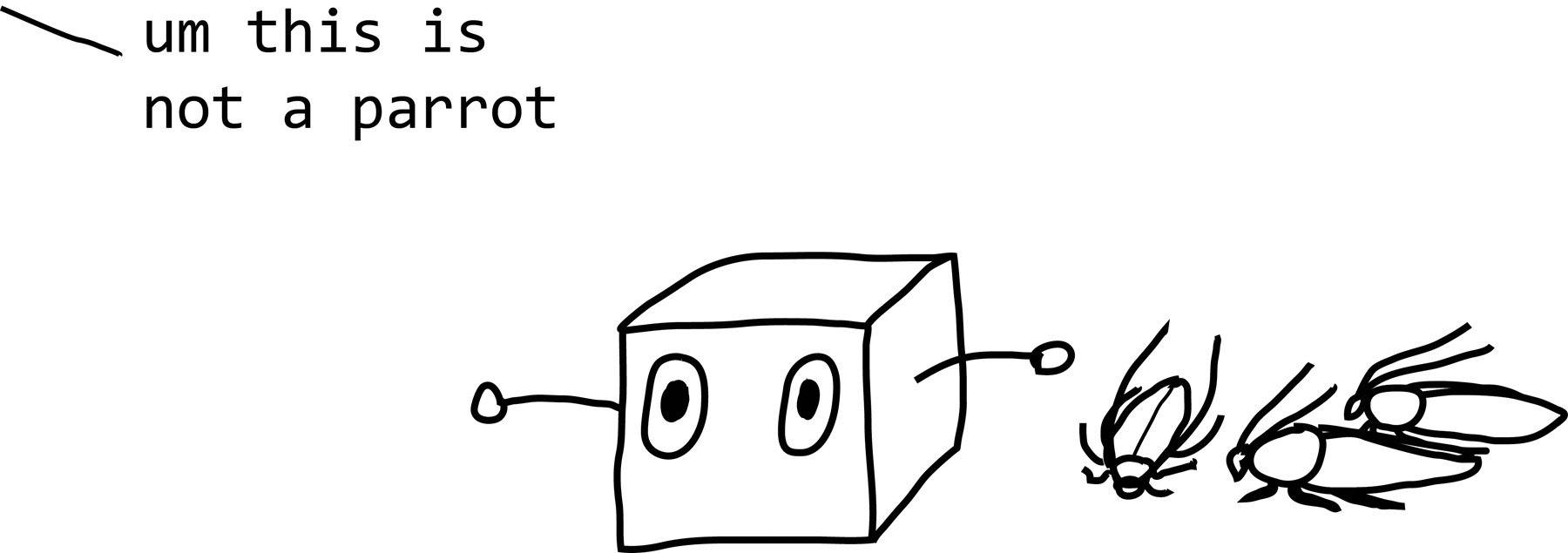

Even when customers stick to the prescribed topic, chatbots will struggle if the topic is too broad. Beginning in August 2015, Facebook tried to create an AI-powered chatbot called M that was meant to make hotel reservations, book theater tickets, recommend restaurants, and more.8 The idea was that the company would start out using humans to handle the most difficult requests, thereby generating lots of examples that the algorithm could learn from. Eventually, Facebook expected the algorithm to have enough data to handle most questions on its own. Unfortunately, given the freedom to ask M anything, customers took Facebook at its word. In an interview, the engineer who started the project recounted, “People try first to ask for the weather tomorrow; then they say ‘Is there an Italian restaurant available?’ Next they have a question about immigration, and after a while they ask M to organize their wedding.”9 A user even asked M to arrange for a parrot to visit his friend. M succeeded—by sending that request to be handled by a human. In fact, years after it introduced M, Facebook found that its algorithm still needed too much human help. It shut down the service in January 2018.10

Dealing with the full range of things a human can say or ask is a very broad task. The mental capacity of AI is still tiny compared to that of humans, and as tasks become broad, AIs begin to struggle.

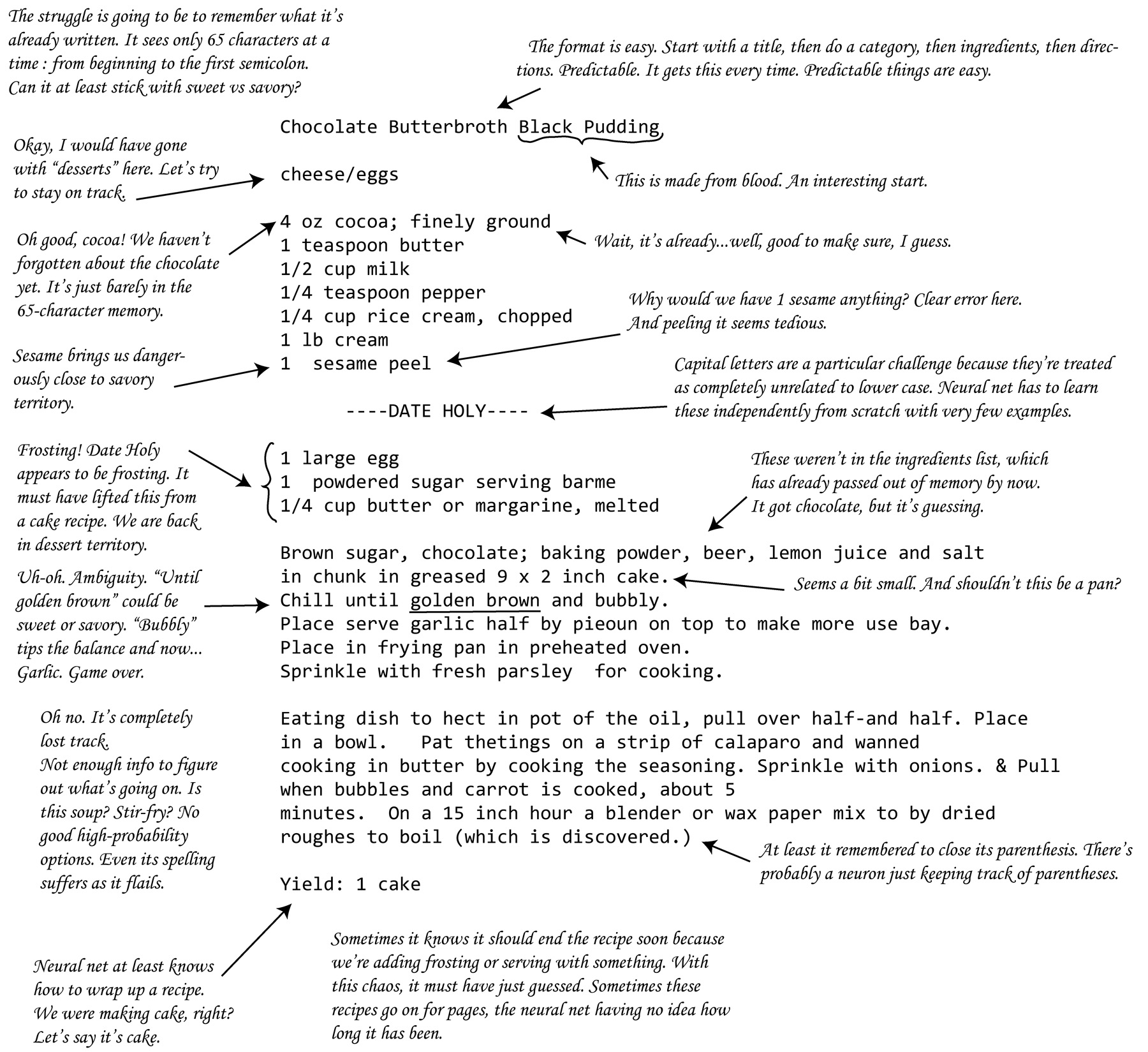

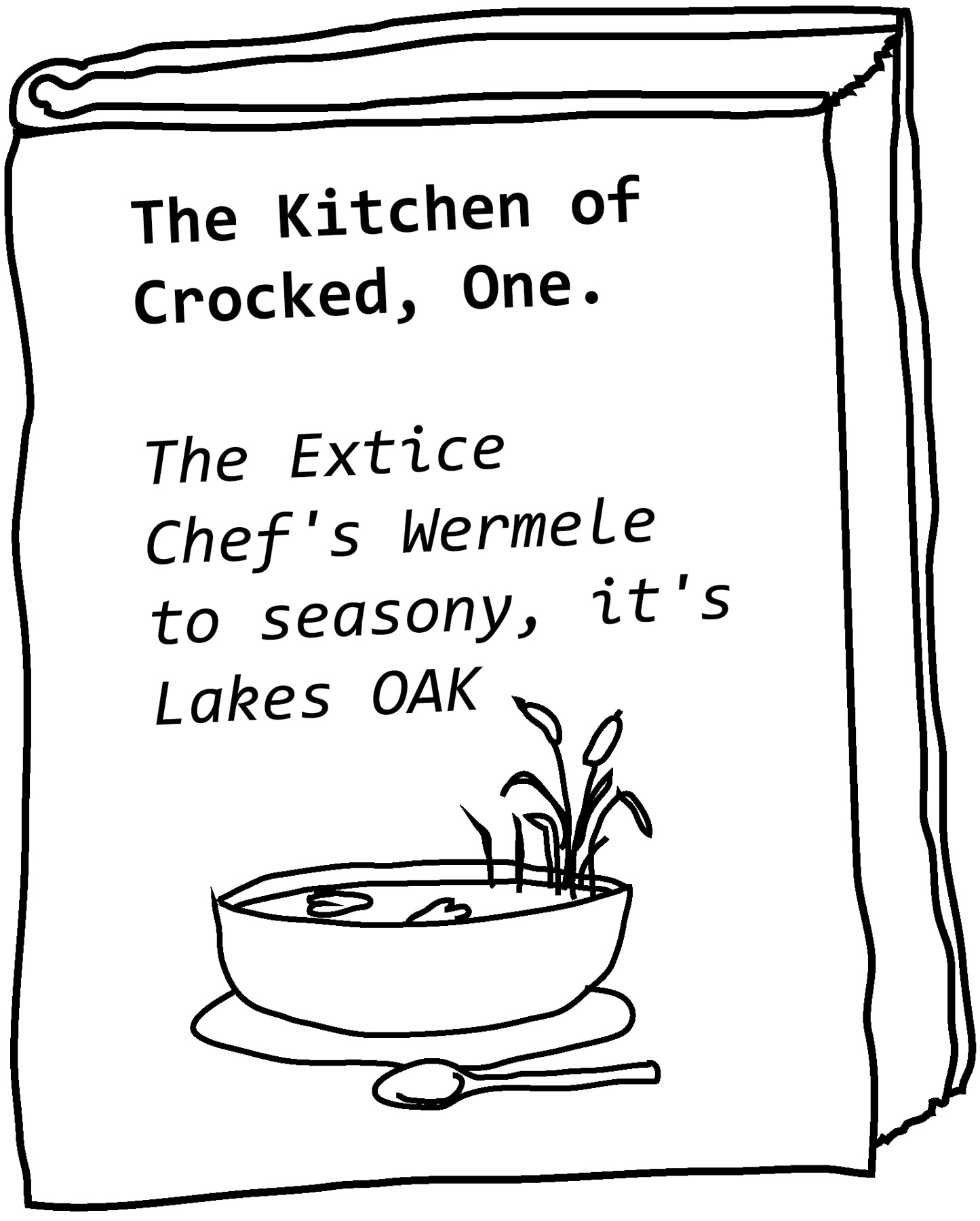

For example, I recently trained an AI to generate recipes. This particular AI is set up to imitate text, but it started from a blank slate—no idea what recipes are, no idea that various letters are referring to ingredients and things that happen to them, no idea even what English is. There’s a lot to keep track of, but it tried its best to figure out how to place one letter after another and imitate the recipes it saw. When I gave it only recipes for cake to learn from, here’s the recipe it produced.

Carrot Cake (Vera Ladies” cakes, alcohol

1 pkg yellow cake mix

3 cup flour

1 teaspoon baking powder

1 ½ teaspoon baking soda

¼ teaspoon salt

1 teaspoon ground cinnamon

1 teaspoon ground ginger

½ teaspoon ground cloves

1 teaspoon baking powder

½ teaspoon salt

1 teaspoon vanilla

1 egg, room temperature

1 cup sugar

1 teaspoon vanilla

1 cup chopped pecans

Preheat oven to 350 degrees. Grease a 9-inch springform pan.

To make the cake: Beat eggs at high speed until thick and yellow color and set aside. In a separate bowl, beat the egg whites until stiff. Speed the first like the mixture into the prepared pan and smooth the batter. Bake in the oven for about 40 minutes or until a wooden toothpick inserted into centre comes out clean. Cool in the pan for 10 minutes. Turn out onto a wire rack to cool completely.

Remove the cake from the pan to cool completely. Serve warm.

HereCto Cookbook (1989) From the Kitchen & Hawn inthe Canadian Living

Yield: 16 servings

Now, the recipe isn’t perfect, but at least it’s a recipe that’s identifiably cake (even if, when you look at the instructions closely, you realize that it only produces a single baked egg yolk).

Next, I asked the AI to learn to generate not just cake recipes but also recipes for soup, barbecue, cookies, and salads. It had about ten times more data to learn from—24,043 general recipes as opposed to just 2,431 recipes in the cake-only dataset. Here’s a recipe it generated.

Spread Chicken Rice cheese/eggs, salads, cheese

2 lb hearts, seeded

1 cup shredded fresh mint or raspberry pie

½ cup catrimas, grated

1 tablespoon vegetable oil

1 salt

1 pepper

2 ½ tb sugar, sugar

Combine unleaves, and stir until the mixture is thick. Then add eggs, sugar, honey, and caraway seeds, and cook over low heat. Add the corn syrup, oregano, and rosemary and the white pepper. Put in the cream by heat. Cook add the remaining 1 teaspoon baking powder and salt. Bake at 350F for 2 to 1 hour. Serve hot.

Yield: 6 servings

This time, the recipe is a total disaster. The AI had to try to figure out when to use chocolate and when to use potatoes. Some recipes required baking, some required slow simmering, and the salads required no cooking at all. With all these rules to try to learn and keep track of, the AI spread its brainpower too thin.

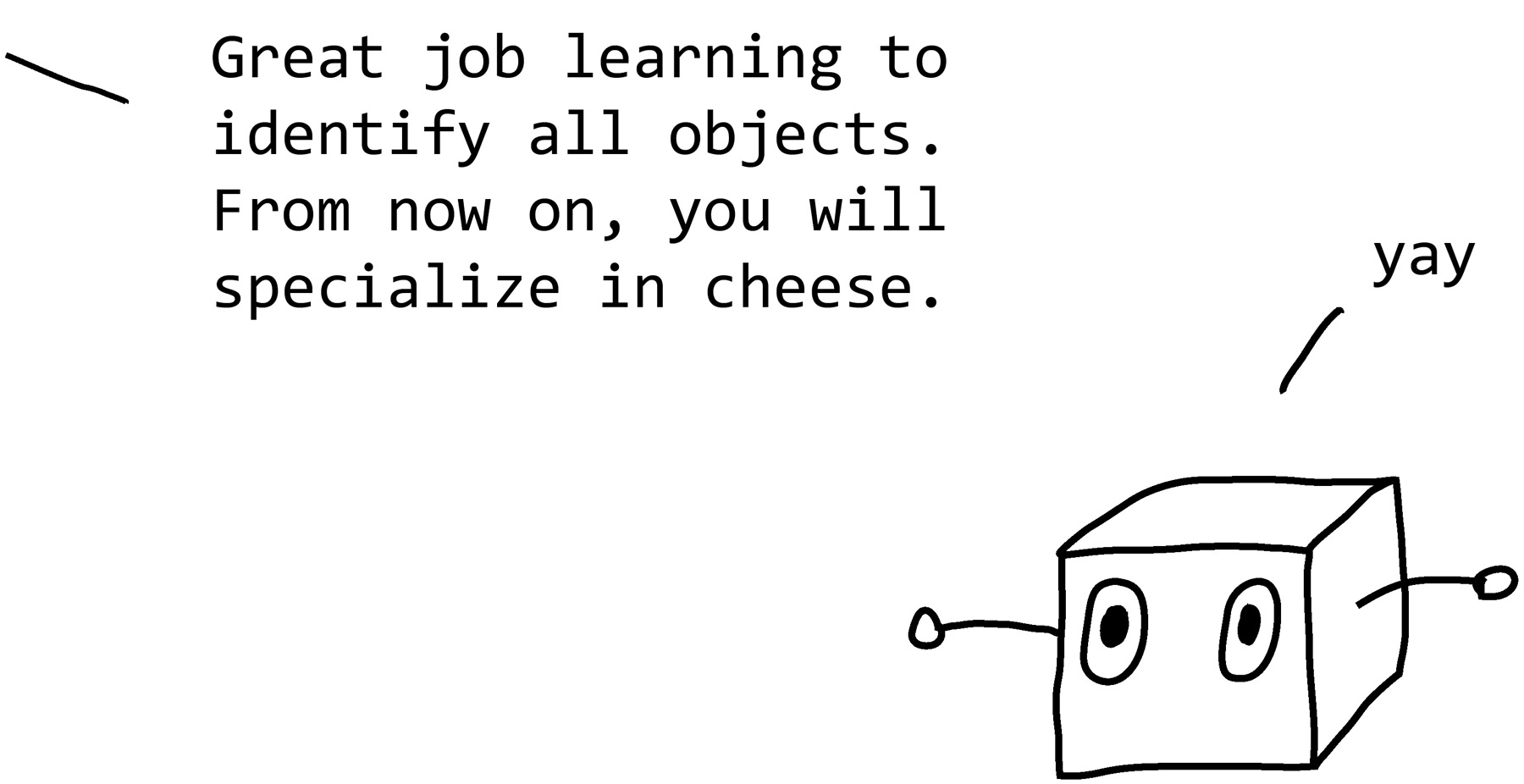

So people who train AIs to solve commercial or research problems have discovered that it makes sense to train them to specialize. If an algorithm seems to be better at its job than the AI that invented Spread Chicken Rice, the main difference is probably that it has a narrower, better-chosen problem. The narrower the AI, the smarter it seems.

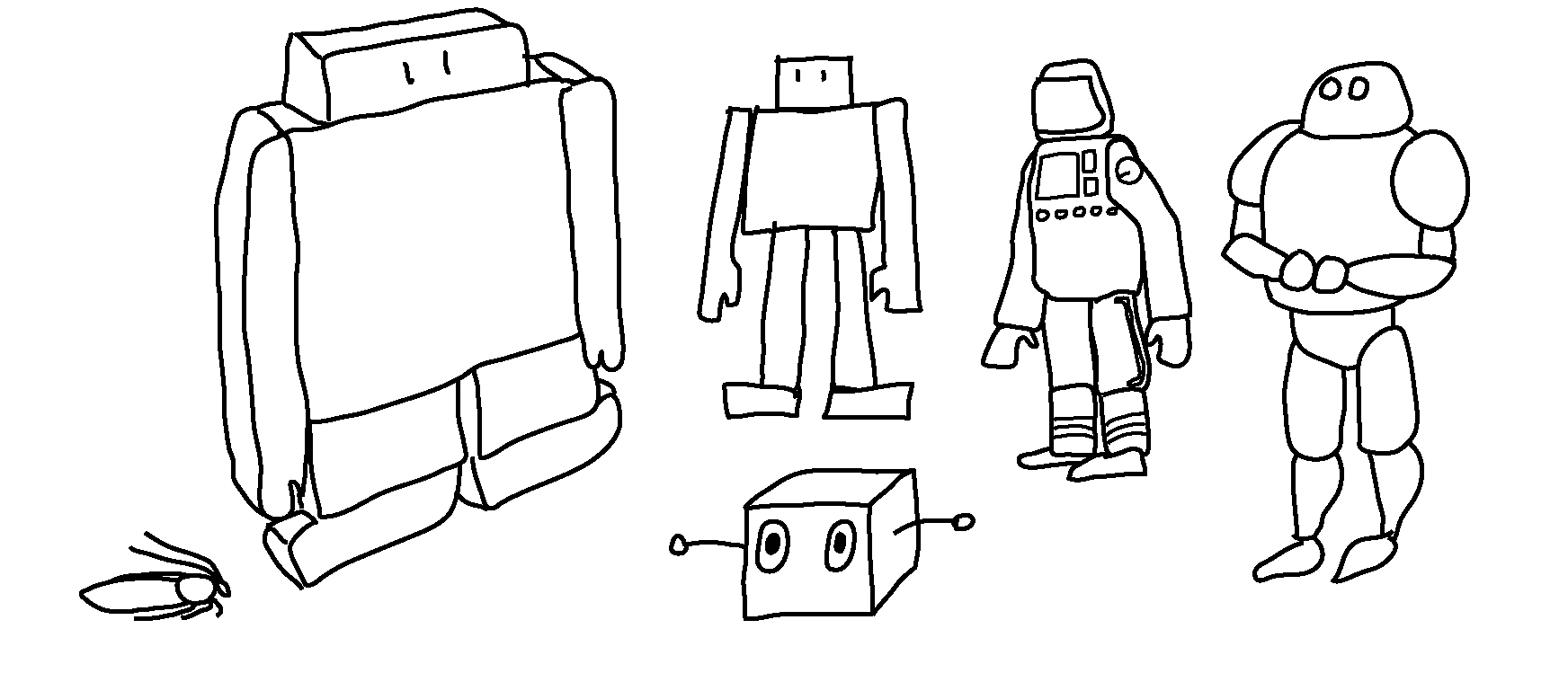

C-3PO VERSUS YOUR TOASTER

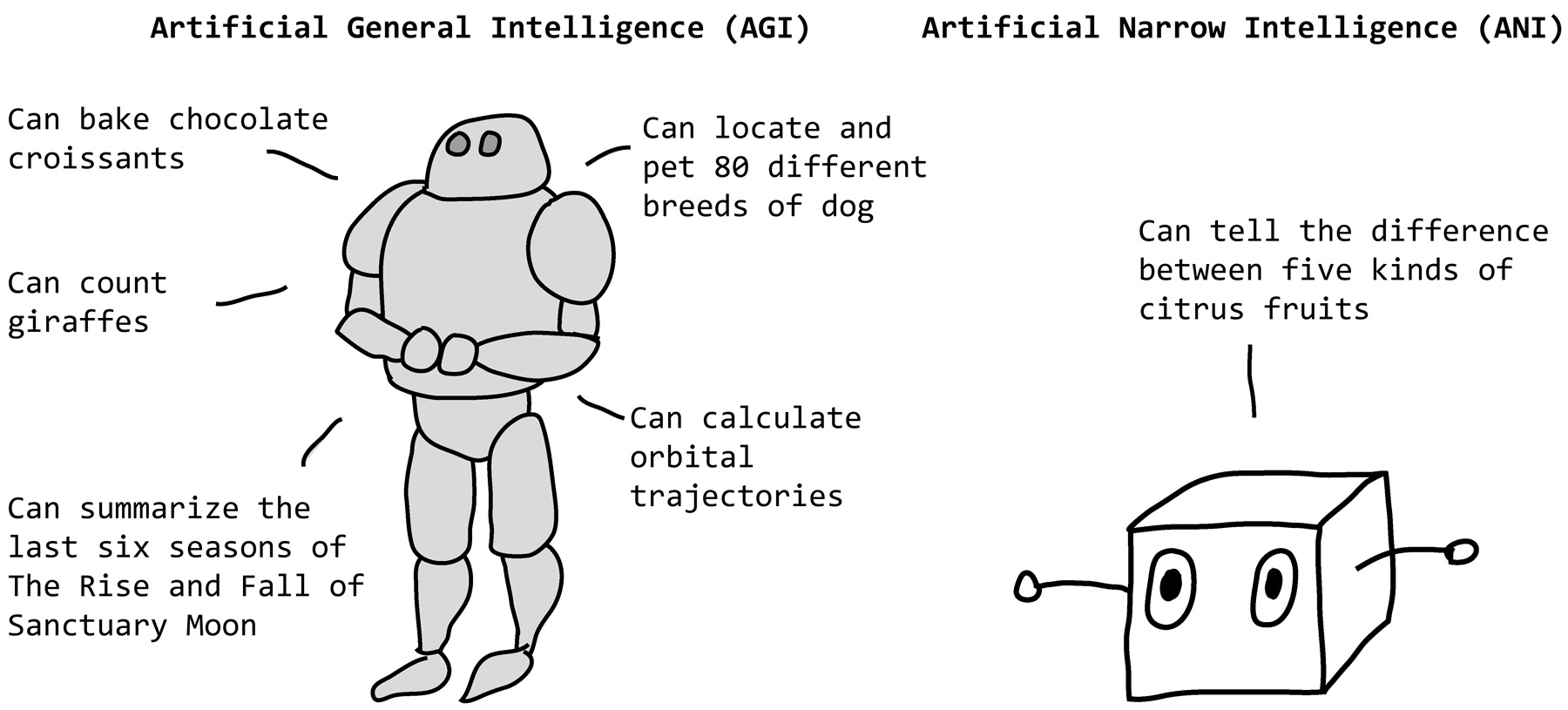

This is why AI researchers like to draw a distinction between artificial narrow intelligence (ANI), the kind we have now, and artificial general intelligence (AGI), the kind we usually find in books and movies. We’re used to stories about superintelligent computer systems like Skynet and HAL or very human robots like Wall-E, C-3PO, Data, and so forth. The AIs in these stories may struggle to understand the fine points of human emotion, but they’re able to understand and react to a huge range of objects and situations. An AGI could beat you at chess, tell you a story, bake you a cake, describe a sheep, and name three things larger than a lobster. It’s also solidly the stuff of science fiction, and most experts agree that AGI is many decades away from becoming reality, if it becomes a reality at all.

The ANI that we have today is less sophisticated. Considerably less sophisticated. Compared to C-3PO, it’s basically a toaster.

The algorithms that make headlines when they beat people at games like chess and go, for example, surpass humans’ ability at a single specialized task. But machines have been superior to humans at specific tasks for a while now. A calculator has always exceeded humans’ ability to perform long division—but it still can’t walk down a flight of stairs.

What problems are narrow enough to be suitable for today’s ANI algorithms? Unfortunately (see warning sign number 1 of AI doom: Problem Is Too Hard), often a real-world problem is broader than it first appears. In our video-interview-analyzing AI from chapter 1, the problem at first glance seems relatively narrow: a simple matter of detecting emotion in human faces. But what about applicants who have had a stroke or who have facial scarring or who don’t emote in neurotypical ways? A human could understand an applicant’s situation and adjust their expectations accordingly, but to do the same, an AI would have to know what words the applicant is saying (speech-to-text is an entire AI problem in itself), understand what those words mean (current AIs can only interpret the meaning of limited kinds of sentences in limited subject areas and don’t do well with nuance), and use that knowledge and understanding to alter how it interprets emotional data. Today’s AIs, incapable of such a complicated task, would most likely screen all these people out before they got to a human.

As we’ll see below, self-driving cars may be another example of a problem that is broader than it at first appears.

INSUFFICIENT DATA DOES NOT COMPUTE

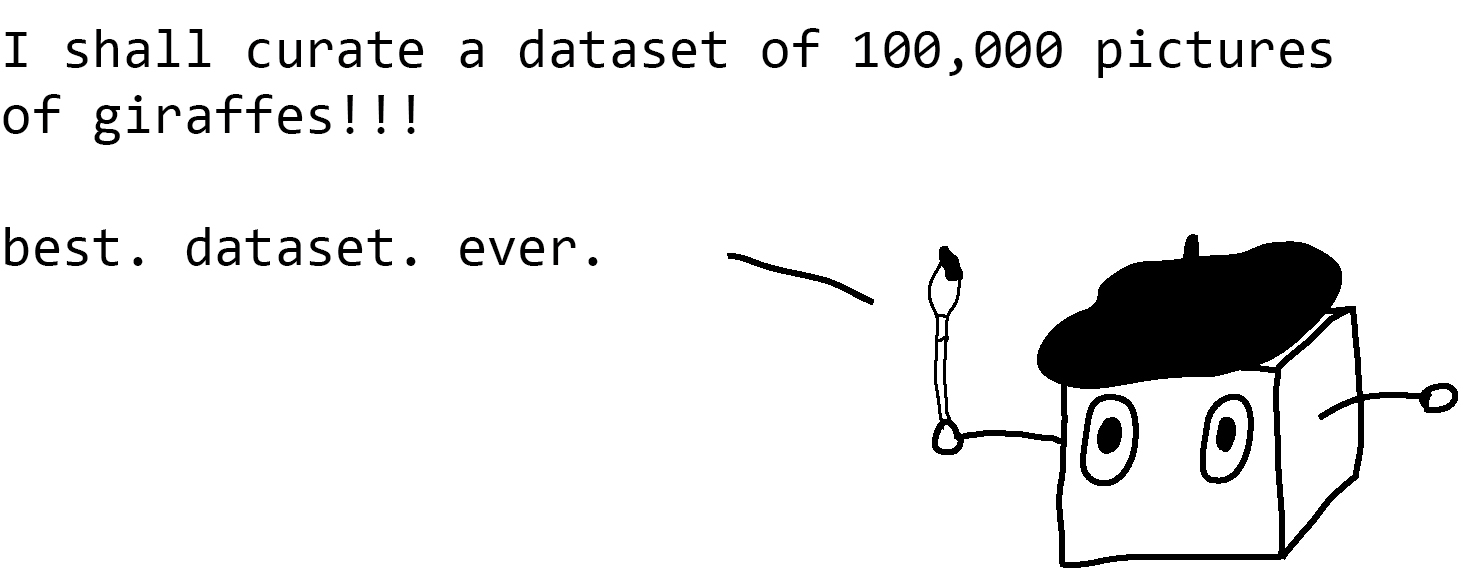

AIs are slow learners. If you showed a human a picture of some new animal called a wug, then gave them a big batch of pictures and told them to identify all the pictures that contain wugs, they could probably do a decent job just based on that one picture. An AI, however, might need thousands or hundreds of thousands of wug pictures before it could even semireliably identify wugs. And the wug pictures need to be varied enough for the algorithm to figure out that “wug” refers to an animal, not to the checkered floor it’s standing on or to the human hand patting its head.

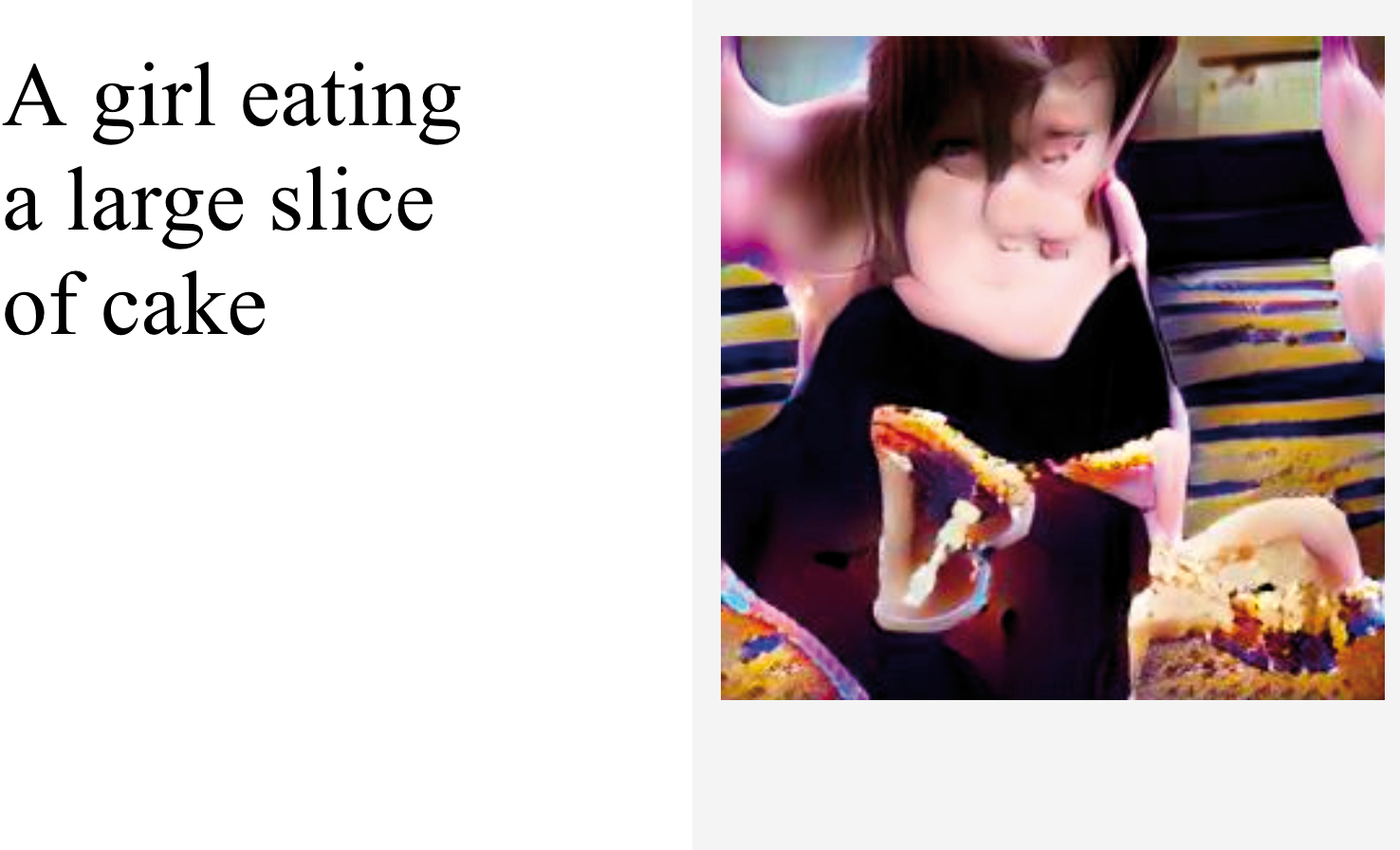

Researchers are working on designing AIs that can master a topic with fewer examples (an ability called one-shot learning), but for now, if you want to solve a problem with AI, you’ll need tons and tons of training data. The popular ImageNet set of training data for image recognition currently has 14,197,122 images, and its most widely used benchmark subset covers only one thousand different categories. Similarly, while a human driver may only need to accumulate a few hundred hours of driving experience before they’re allowed to drive on their own, as of 2018 the self-driving car company Waymo’s cars have collected data from driving more than six million road miles plus five billion more miles driven in simulation.11 And we’re still a ways off from a widespread rollout of self-driving car technology. AI’s data hungriness is a big reason why the age of “big data,” where people collect and analyze huge sets of data, goes hand in hand with the age of AI.

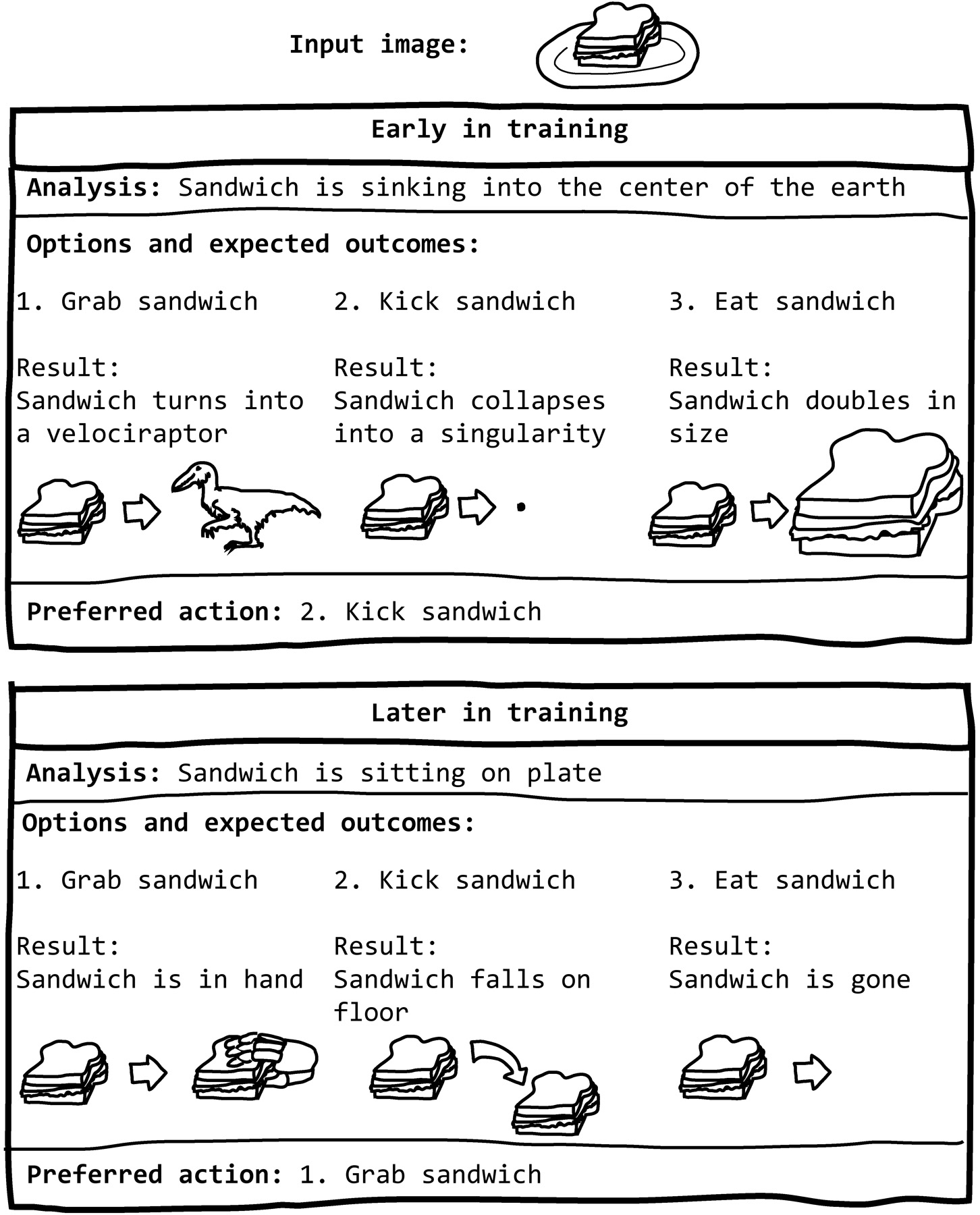

Sometimes AIs learn so slowly that it’s impractical to let them do their learning in real time. Instead, they learn in sped-up time, amassing hundreds of years’ worth of training in just a few hours. A program called OpenAI Five, which learned to play the computer game Dota (an online fantasy game in which teams have to work together to take over a map), was able to beat some of the world’s best human players by playing games against itself rather than against humans. It challenged itself to tens of thousands of simultaneous games, accumulating 180 years of gaming time each day.12 Even if the goal is to do something in the real world, it can make sense to build a simulation of that task to save time and effort.
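A quick back-of-the-envelope check shows how those two figures fit together. The only inputs are the 180-years-per-day figure above and the simplifying assumption (mine, not a published detail) that each game runs at roughly normal speed.

```python
# Back-of-the-envelope arithmetic. The 180-years-per-day figure is quoted in
# the text; running each game at normal speed is a simplifying assumption.
YEARS_OF_PLAY_PER_DAY = 180
DAYS_PER_YEAR = 365

# Days of gameplay squeezed into each real day.
game_days_per_real_day = YEARS_OF_PLAY_PER_DAY * DAYS_PER_YEAR

# If every game ran at normal speed around the clock, this many would have
# to run side by side to add up to that much play.
simultaneous_games_needed = game_days_per_real_day

print(simultaneous_games_needed)  # 65700
```

Under that assumption, the result lands squarely in “tens of thousands of simultaneous games” territory.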

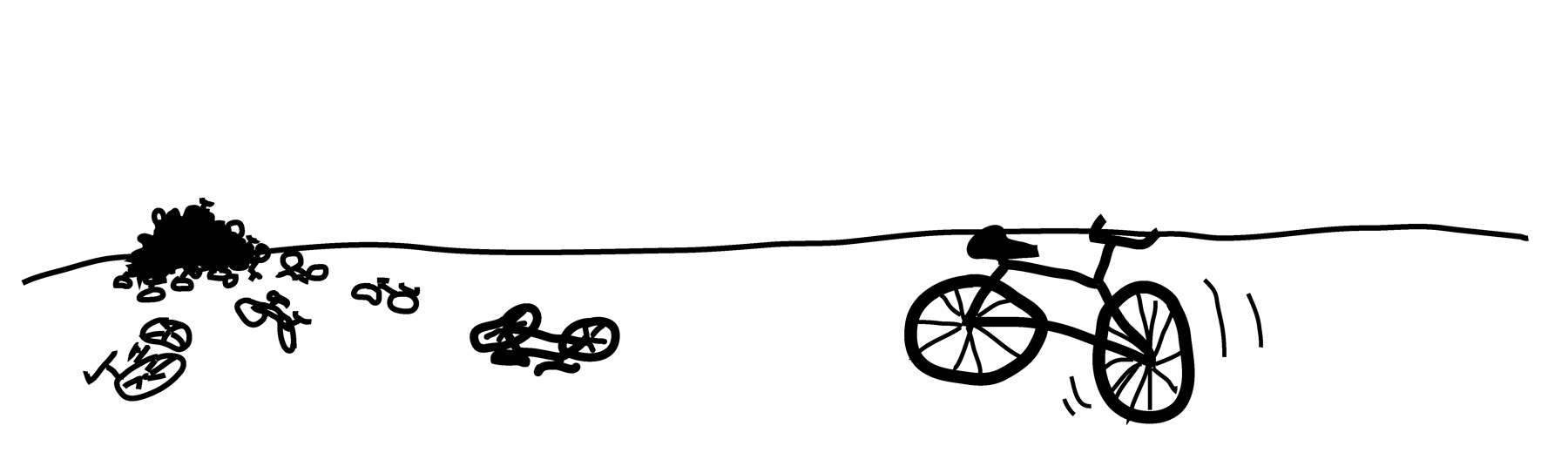

Another AI’s task was to learn to balance a bicycle. It was a bit of a slow learner, though. The programmers kept track of all the paths the bicycle’s front wheel took as it repeatedly wobbled and crashed. It took more than a hundred crashes before the AI could drive more than a few meters without falling, and thousands more before it could go more than a few tens of meters.

Training an AI in simulation is convenient, but it also comes with risks. Because of the limited computing power of the computers that run them, simulations aren’t nearly as detailed as the real world and are by necessity held together with all sorts of hacks and shortcuts. That can sometimes be a problem if the AI notices the shortcuts and begins to exploit them (more on that later).

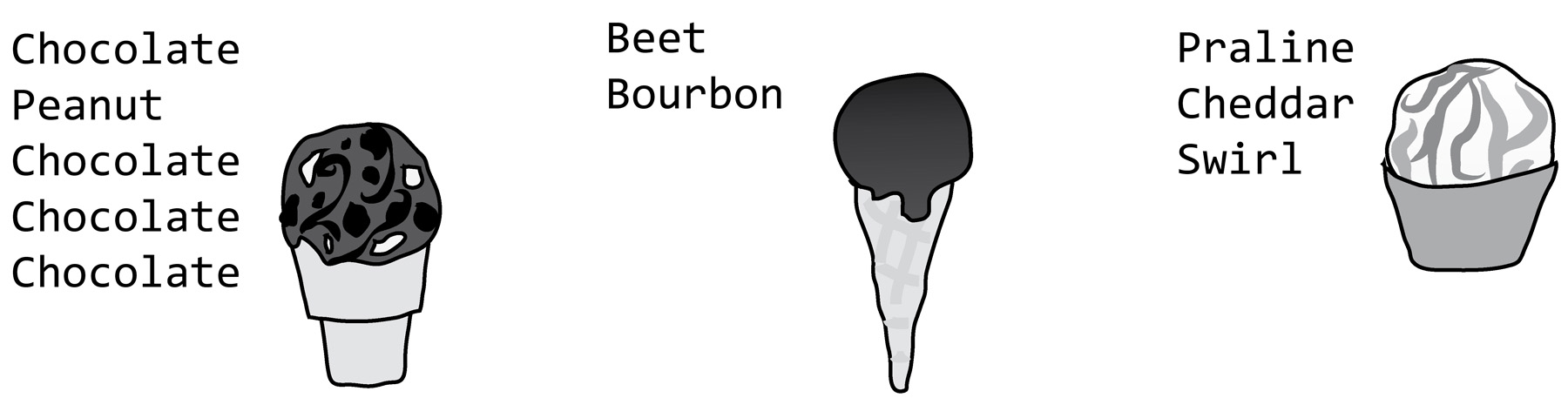

PIGGYBACKING ON OTHER PROGRESS

If you don’t have lots of training data, you might still be able to solve your problem with AI if you or someone else has already solved a similar problem. If the AI starts not from scratch but from a configuration it learned from a previous dataset, it can reuse a lot of what it learned. For example, say I already have an AI that I’ve trained to generate the names of metal bands. If my next task is to build an AI that can generate ice cream flavors, I may get results more quickly, and need fewer examples, if I start with the metal-band AI. After all, from learning to generate metal bands, the AI already knows

• approximately how long each name should be,

• that it should capitalize the first letter of each line,

• common letter combinations—ch and va and str and pis (it is already partway to spelling chocolate, vanilla, strawberry, and pistachio!)—and

• commonly occurring words, such as the and, um… death?

So a few short rounds of training can retrain the AI from a model that produces this:

Dragonred of Blood

Stäggabash

Deathcrack

Stormgarden

Vermit

Swiil

Inbumblious

Inhuman Sand

Dragonsulla and Steelgosh

Chaosrug

Sespessstion Sanicilevus

into a model that produces this:

Lemon-Oreo

Strawberry Churro

Cherry Chai

Malted Black Madnesss

Pumpkin Pomegranate Chocolate Bar

Smoked Cocoa Nibe

Toasted Basil

Mountain Fig n Strawberry Twist

Chocolate Chocolate Chocolate Chocolate Road

Chocolate Peanut Chocolate Chocolate Chocolate

(There’s only a miiinor awkward phase in between, when it’s generating things like this:)

Person Cream

Nightham Toffee

Feethberrardern’s Death

Necrostar with Chocolate Person

Dirge of Fudge

Beast Cream

End All

Death Cheese

Blood Pecan

Silence of Coconut

The Butterfire

Spider and Sorbeast

Blackberry Burn

Maybe I should have started with pie instead.

As it turns out, AI models get reused a lot, a process called transfer learning. Not only can you get away with using less data by starting with an AI that’s already partway to its goal, you can also save a lot of time. It can take days or even weeks to train the most complex algorithms with the largest datasets, even on very powerful computers. But it takes only minutes or seconds to use transfer learning to train the same AI to do a similar task.

People use transfer learning a lot in image recognition in particular, since training a new image recognition algorithm from scratch requires a lot of time and a lot of data. Often people will start with an algorithm that’s already been trained to recognize general sorts of objects in generic images, then use that algorithm as a starting point for specialized object recognition. For example, if an algorithm already knows rules that help it recognize pictures of trucks, cats, and footballs, it already has a head start on the task of distinguishing different kinds of produce for, say, a grocery scanner. A lot of the rules a generic image recognition algorithm has to discover—rules that help it find edges, identify shapes, and classify textures—will be helpful for the grocery scanner.
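The head start can be illustrated with a deliberately tiny stand-in. The sketch below is not a neural network; it is a letter-pair counter, chosen here only because it fits in a few lines. The name lists are short samples from the examples above, and the `familiar_pairs` score is a measure made up for this illustration.

```python
from collections import Counter

# Toy stand-in for transfer learning: a letter-pair counter, not a neural
# network. The name lists are short samples taken from the examples above.
METAL_BANDS = ["Deathcrack", "Stormgarden", "Chaosrug", "Inhuman Sand"]
ICE_CREAMS = ["Cherry Chai", "Strawberry Churro", "Toasted Basil"]


def train(names, start_from=None):
    """Count adjacent letter pairs, optionally continuing from earlier counts."""
    counts = Counter(start_from) if start_from else Counter()
    for name in names:
        text = name.lower()
        counts.update(zip(text, text[1:]))
    return counts


def familiar_pairs(model, word):
    """How many of the word's letter pairs has the model already seen?"""
    pairs = list(zip(word, word[1:]))
    return sum(1 for pair in pairs if model[pair] > 0)


blank_slate = train([])
metal_model = train(METAL_BANDS)
# "Transfer": keep the metal-band counts and continue training on ice cream.
ice_cream_model = train(ICE_CREAMS, start_from=metal_model)

for label, model in [("blank slate", blank_slate),
                     ("metal bands only", metal_model),
                     ("metal bands, then ice cream", ice_cream_model)]:
    print(label, familiar_pairs(model, "chocolate"))
```

Even before it sees a single ice cream flavor, the metal-band model recognizes a couple of the letter pairs in “chocolate,” while the blank slate recognizes none. A real image or text model reuses far richer knowledge, but the principle of starting from earlier learning is the same.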

DON’T ASK IT TO REMEMBER

A problem is more easily solvable with AI if it doesn’t require much memory. Because of their limited brainpower, AIs are particularly bad at remembering things. This shows up, for example, when AIs try to play computer games. They tend to be extravagant with their characters’ lives and other resources (like powerful attacks that they only have in limited numbers). They’ll burn through lots of lives and spells at first until their numbers get critically low, at which point they’ll suddenly start being cautious.13

One AI learned to play the game Karate Kid, but it always squandered all its powerful Crane Kick moves at the beginning of the game. Why? It only had enough memory to look forward to the next six seconds of game play. As Tom Murphy, who trained the algorithm, put it, “Anything that you are gonna need 6 seconds later, well, too bad. Wasting lives and other resources is a common failure mode.”14

Even sophisticated algorithms like OpenAI’s Dota-playing bot have only a limited time frame over which they can remember and predict. OpenAI Five can predict an impressive two minutes into the future (impressive for a game with so many complex things happening so quickly), but Dota matches can last for forty-five minutes or more. Although OpenAI Five can play with a terrifying level of aggression and precision, it also seems not to know how to use techniques that will pay off in the much longer term.15 Like the simple Karate Kid bot that employs the Crane Kick too early, it tends to use up a character’s most powerful attacks early on rather than saving them for later, when they will count the most.

This failure to plan ahead shows up fairly often. In level 2 of Super Mario Bros., there is an infamous ledge, the bane of all game-playing algorithms. This ledge has lots of shiny coins on it! By the time they get to level 2, AIs usually know coins are good. The AIs also usually know that they have to keep moving to the right so they can reach the end of the level before time runs out. But if the AI jumps onto the ledge, it then has to go backwards to get down off the ledge. The AIs have never had to go backwards before. They can’t figure it out, and they get stuck on the ledge until time runs out. “I literally spent about six weekends and thousands of hours of CPU on the problem,” said Tom Murphy, who eventually got past the ledge with some improvements to his AI’s skills at long-term planning.16

Text generation is another place where the short memory of AI can be a problem. For example, Heliograf, the journalism algorithm that translates individual lines of a spreadsheet into sentences in a formulaic sports story, works because it can write each sentence more or less independently. It doesn’t need to remember the entire article at once.

Language-translating neural networks, like the kind that power Google Translate, don’t need to remember entire paragraphs, either. Sentences, or even parts of sentences, can usually be individually translated from one language to another without any memory of the previous sentence. When there is some kind of long-term dependence, such as an ambiguity that might have been resolved with information from a previous sentence, the AI usually can’t make use of it.

Other kinds of tasks make AI’s terrible memory even more obvious. One example is algorithmically generated stories. There’s a reason AI doesn’t write books or TV shows (though people are, of course, working on this).

If you’re ever wondering whether a bit of text was written by a machine learning algorithm or a human (or at least heavily curated by a human), one way to tell is to look for major problems with memory. As of 2019, only some AIs are starting to be able to keep track of long-term information in a story—and even then, they’ll tend to lose track of some bits of crucial information.

Many text-generating AIs can only keep track of a few words at a time. For example, here’s what a recurrent neural network (RNN) wrote after it was trained on nineteen thousand descriptions of people’s dreams from dreamresearch.net:

I get up and walk down the hall to his house and see a bird in the very narrow drawer and it is a group of people in the hand doors. At home like an older man is going to buy some keys. He looks at his head with a cardboard device and then my legs are parked on the table.

Now, dreams are notoriously incoherent, switching settings and mood and even characters midstream. These neural-net dreams, however, don’t maintain coherence for more than a sentence or so—sometimes considerably less. Characters who are never introduced are referred to as if they had been there all along. The whole dream forgets where it is. Individual phrases may make sense, and the rhythm of the words sounds okay if you don’t pay attention to what’s going on. Matching the surface qualities of human speech while lacking any deeper meaning is a hallmark of neural-net-generated text.

On the next page is another example, this time a recipe, where it’s even easier to see the effects of memory limitation. This recipe was generated by the same recurrent neural network, or machine learning algorithm, that generated the recipes here. (As you can see, this is the one that learned from a variety of recipes, including, apparently, recipes for black pudding, a type of blood sausage.) This neural network builds a recipe letter by letter, looking at the letters it’s already generated to decide which one comes next. But each extra letter that it looks at requires more memory, and there’s only so much memory available on the computer that’s running it. So to make the memory demands manageable, the neural network looks only at the most recent characters, a few at a time. For this particular algorithm and my computer, the largest memory I could give it was sixty-five characters. So every time it had to come up with the next letter of the recipe, it only had information about the previous sixty-five characters.* You can tell where in the recipe it ran out of memory and forgot it was making a chocolate dessert—about when it decided to add black pepper and whatever “rice cream” is.
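To make the sixty-five-character limit concrete, here is a minimal Python sketch of a fixed context window. It is not the code behind the recipes in this book: the function name `visible_context` and the sample recipe text are made up for illustration, and the only number taken from the text is the window size of sixty-five.

```python
WINDOW = 65  # window size from the text; everything else here is illustrative


def visible_context(generated: str, window: int = WINDOW) -> str:
    """Return the only part of the text available when choosing the next letter."""
    return generated[-window:]


# Hypothetical recipe opening, invented for this sketch (not a recipe from the book).
recipe = (
    "Title: Chocolate Cake\n"
    "Ingredients: 2 cups flour, 1 cup sugar, 3 eggs, 1 cup butter\n"
    "Directions: Cream the butter and sugar, then add"
)

context = visible_context(recipe)
print(repr(context))                    # the last 65 characters only
print("chocolate" in context.lower())   # is the title still visible?
```

By the time the directions are under way, the word "chocolate" in the title has slipped out of the window, so nothing the network can still see tells it what kind of dessert it set out to make.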

This memory limitation is beginning to change. Researchers are working on making recurrent neural networks that can look at short-term and long-term features when predicting the next letters in a text. The idea is similar to the algorithms that look at small-scale features in images first (edges and textures, for example), then zoom out to look at the big picture. These strategies are called convolution. A neural network that uses convolution (and that is also hundreds of times larger than the one I trained on my laptop) can keep track of information long enough to remain on topic. The following recipe is from a neural network called GPT-2, which OpenAI trained on a huge selection of webpages, and which I then fine-tuned by training it on all kinds of recipes.

8 cup flour

4 lb butter; room temperature

2 ¼ cup corn syrup; divided

2 eggs; pureed and cooled

1 teaspoon cream of tartar

½ cup m&m’s

8 oz chunky whites

1 chocolate sifted

Cream 2 ¼ cups of flour at medium speed until thickened.

Lightly grease and flour two greased and waxed paper-lined box ingredients; combine flour, syrup, and eggs. Add cream of tartar. Pour into a gallon-size loaf-pan. Bake at 450 degrees for 35 minutes. Meanwhile, in large bowl, combine syrup, whites, and chocolate; stir which until thoroughly mixed. Cool pan. Pour 2 tb chocolate mixture over whole cake. Refrigerate until serving time.

Yield: 20 servings

With its memory improved by convolution, the GPT-2 neural net remembers to use most of its ingredients, and even remembers that it’s supposed to be making cake. Its directions are still somewhat improbable—plain flour won’t thicken no matter how long you cream it, and the flour/syrup/egg mixture is unlikely to turn into cake, even with the addition of cream of tartar. It’s still an impressive improvement compared to the Chocolate Butterbroth Black Pudding.

Another strategy for dealing with memory limits is to group basic units together so the neural network can achieve coherence while remembering fewer things. Rather than remembering sixty-five letters, it might remember sixty-five entire words, or even sixty-five plot elements. If I had restricted my neural network to a specially crafted set of required ingredients and allowable ranges—as a team at Google did when trying to design a new gluten-free chocolate chip cookie—it would have produced valid recipes every time.17 Unfortunately, Google’s result, though more cookielike than anything my algorithm could have produced, was reportedly still terrible.18
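The first idea in this paragraph, remembering sixty-five words instead of sixty-five letters, can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the helper `last_units` is hypothetical, a "word" is taken to be anything separated by whitespace, and the sample text is invented.

```python
def last_units(text: str, n: int, unit: str) -> str:
    """Return the stretch of text covered by the last n units.

    unit="char" counts single characters; unit="word" counts
    whitespace-separated words (a simplifying assumption).
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if unit == "char":
        return text[-n:]
    if unit == "word":
        return " ".join(text.split()[-n:])
    raise ValueError("unit must be 'char' or 'word'")


# Invented sample text, repeated so that it is longer than either window.
text = "Pour the chocolate batter into a greased pan and bake until set. " * 20

by_char = last_units(text, 65, "char")
by_word = last_units(text, 65, "word")

print(len(by_char))  # characters of text covered by 65 letters
print(len(by_word))  # characters of text covered by 65 words
```

The number of remembered items is the same in both cases, but each word stands in for several letters, so the same budget reaches much further back into the text.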

IS THERE A SIMPLER WAY OF SOLVING THIS PROBLEM?

This leads us to one of the final things that determines whether a problem is a good one for AI (although it doesn’t determine whether people will try to use AI to solve the problem anyway): is AI really the simplest way of solving it?

Some problems were tough to make progress on before we had big AI models and lots of data. AI revolutionized image recognition and language translation, making smart photo tagging and Google Translate ubiquitous. Those problems are hard for people to write down general rules for, but an AI approach can analyze lots of information and form its own rules. Or an AI can look at one hundred characteristics of phone customers who switched to a different provider, then figure out how to guess which customers are likely to switch in the future. Maybe the volatile customers are young, live in areas with poorer than average coverage, and have been customers for less than six months.

The danger, however, is misapplying a complex AI solution to a situation that would be better handled by a bit of common sense. Maybe the customers who leave are the ones on the weekly cockroach delivery plan—that plan is terrible.

LET THE AI DRIVE?

What about self-driving cars? There are many reasons why this is an attractive problem for AI. We would love to automate driving, of course, since many people find it tedious or at times even impossible. A competent AI driver would have lightning-fast reflexes, would never weave or drift in its lane, and would never drive aggressively. In fact, self-driving cars sometimes tend to be too timid, and they have trouble merging with rush-hour traffic or turning left on a busy road.19 The AI would never get tired, though, and could take the wheel for endless hours while the humans nap or party.

We can also accumulate lots of example data as long as we can afford to pay human drivers to drive around for millions of miles. We can easily build virtual driving simulations so that the AI can test and refine its strategies in sped-up time.

The memory requirements for driving are modest, too. This moment’s steering and velocity don’t depend on things that happened five minutes ago. Navigation takes care of planning for the future. Road hazards like pedestrians and wildlife come and go in a matter of seconds.

And finally, controlling a self-driving car is so difficult that we don’t have other good solutions. AI is the solution that’s gotten us the furthest so far.

Yet it is an open question whether driving is a narrow enough problem to be solved with today’s AI or whether it will require something more like the human-level artificial general intelligence (AGI) I mentioned earlier. So far, AI-driven cars have proved themselves able to drive millions of miles on their own, and some companies report that a human needed to intervene on test drives only once every few thousand miles or so. It’s that rare need for intervention, however, that’s proving tough to eliminate fully.

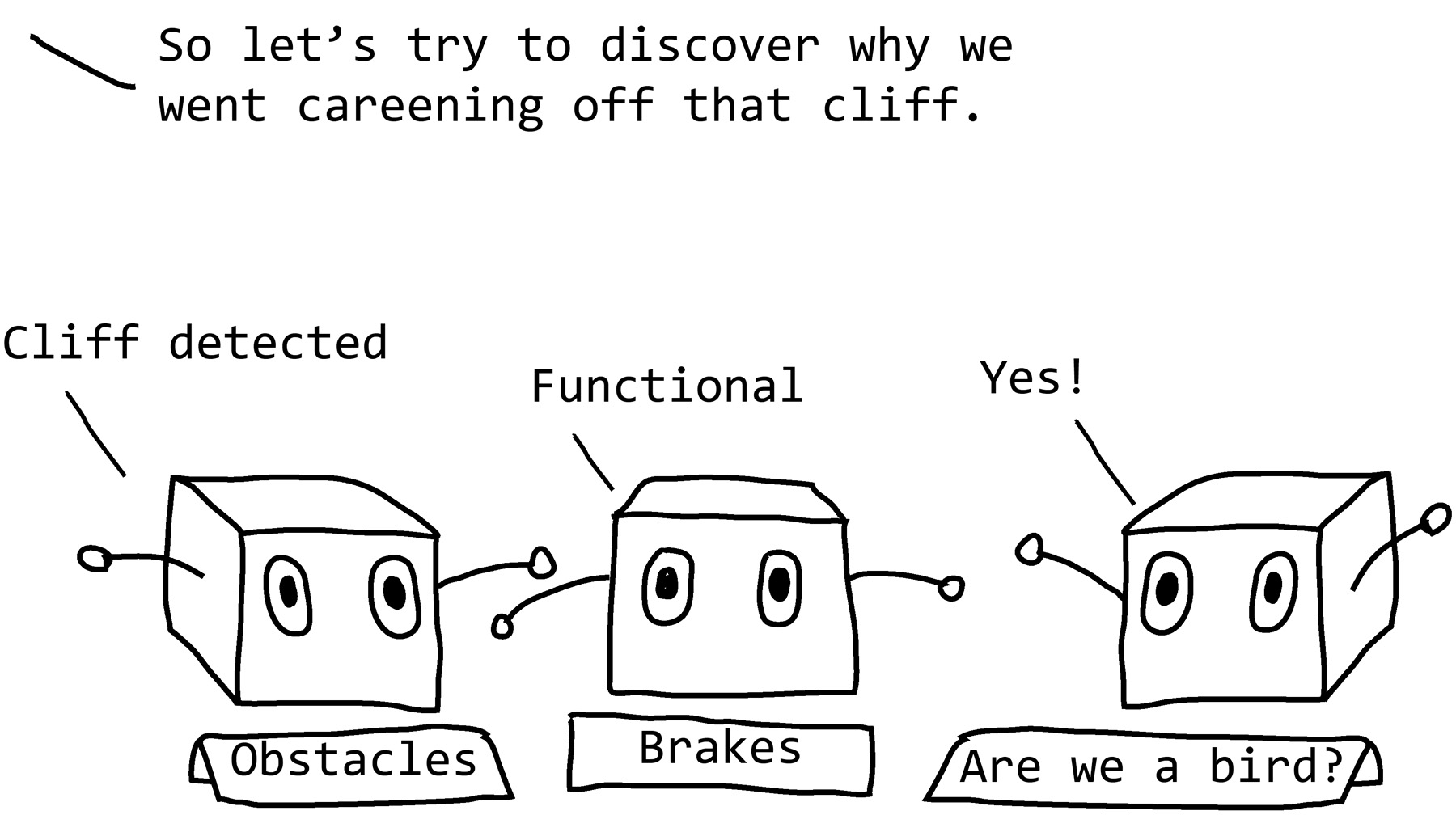

Humans have needed to rescue the AIs of self-driving cars from a variety of situations. Usually companies don’t disclose the reasons for these so-called disengagements, only the number of them, which is required by law in some places. This may be in part because the reasons for disengagement can be frighteningly mundane. In 2015 a research paper20 listed some of them. The cars in question, among other things,

• saw overhanging branches as an obstacle,

• got confused about which lane another car was in,

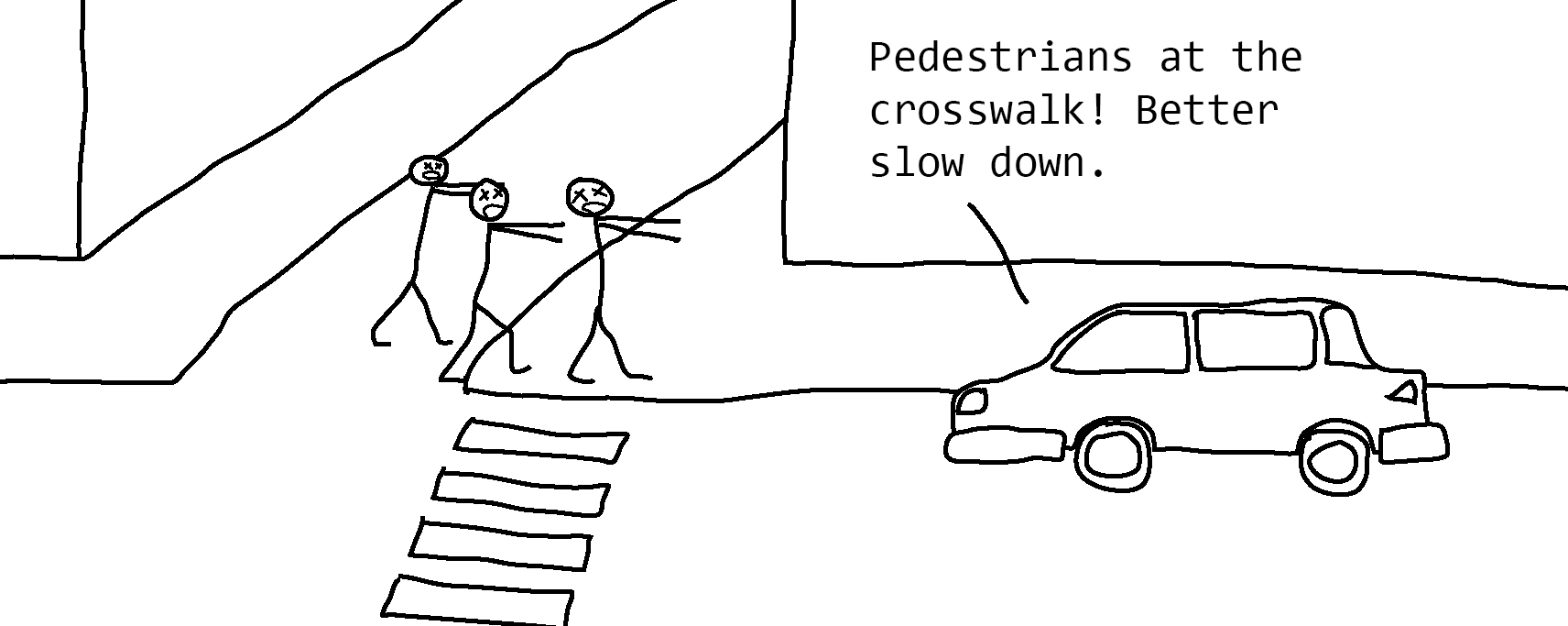

• decided that the intersection had too many pedestrians for it to handle,

• didn’t see a car exiting a parking garage, and

• didn’t see a car that pulled out in front of it.

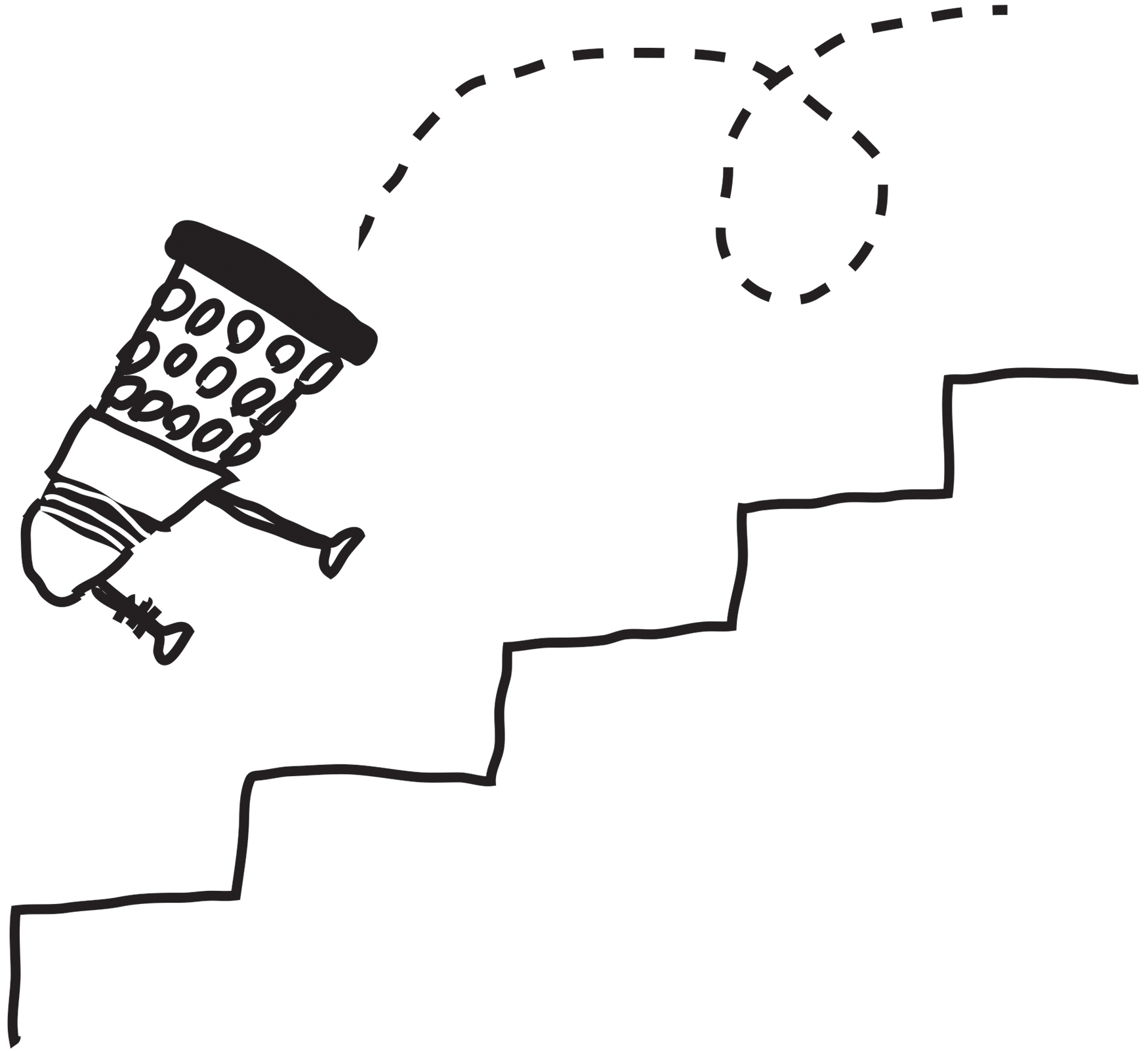

A fatal accident in March 2018 was the result of a situation like this: a self-driving car’s AI had trouble identifying a pedestrian, classifying her first as an unknown object, then as a bicycle, and then finally, with only 1.3 seconds left for braking, as a pedestrian. (The problem was further compounded by the fact that the car’s emergency braking systems were disabled in favor of alerting the car’s backup driver, yet the system was not designed to actually alert the backup driver. The backup driver had also spent many, many hours riding with no intervention needed, a situation that would make the vast majority of humans less than alert.)21 A fatal accident in 2016 also happened because of an obstacle-identification error: in this case, a self-driving car failed to recognize a flatbed truck as an obstacle (see the box on the next page).

That’s not to mention the more unusual situations that can occur. When Volkswagen tested its AI in Australia for the first time, they discovered it was confused by kangaroos. Apparently it had never before encountered anything that hopped.23

Given the sheer variety of things that can happen on a road (parades, escaped emus, downed electrical lines, lava, emergency signs with unusual instructions, molasses floods, and sinkholes), it’s inevitable that something will occur that an AI never saw in training. It’s a tough problem to make an AI that can deal with something completely unexpected: one that would know that an escaped emu is likely to run wildly around while a sinkhole will stay put, and that would understand intuitively that just because lava flows and pools sort of like water does, it doesn’t mean you can drive through a puddle of it.

Car companies are trying to adapt their strategies to the inevitability of mundane glitches or freak weirdness on the road. They’re looking into limiting self-driving cars to closed, controlled routes (this doesn’t necessarily solve the emu problem; they are wily) or having self-driving trucks caravan behind a lead human driver. In other words, the compromises are leading us toward solutions that look very much like mass public transportation.

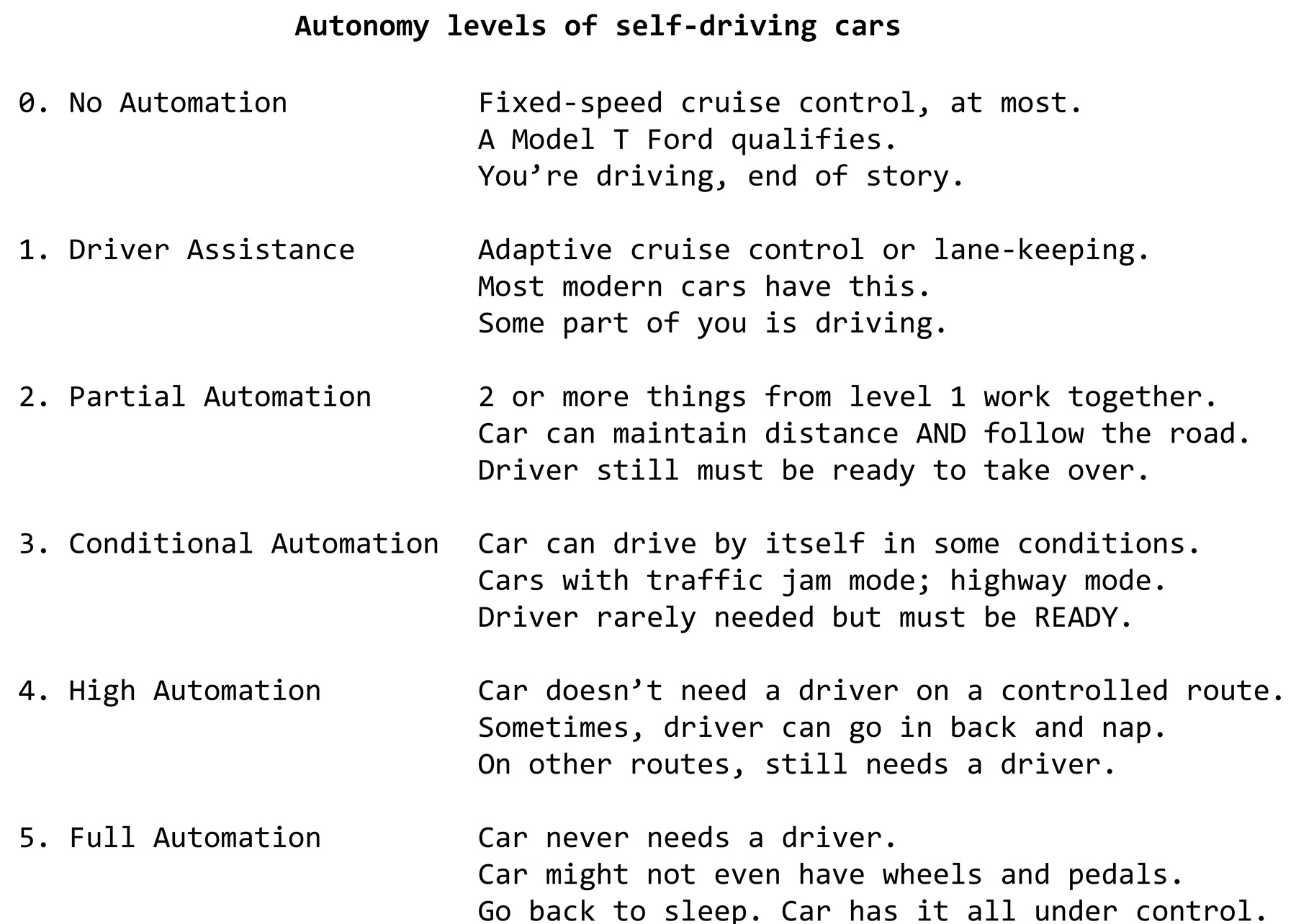

As of right now, when the AIs get confused, they disengage—that is, they suddenly hand control back to the human behind the wheel. Automation level 3, conditional automation, is the highest level of car autonomy commercially available—in Tesla’s autopilot mode, for example, the car can drive for hours unguided, but a human driver can be called to take over at any moment. The problem with this level of automation is that the human had better be behind the wheel and paying attention, not in the back seat decorating cookies. And humans are very, very bad at being alert after boring hours of idly watching the road. Human rescue is often a decent option for bridging the gap between the AI performance we have and the performance we need, but humans are pretty bad at rescuing self-driving cars.

So making self-driving cars is at once an attractive and very difficult AI problem. To get mainstream self-driving cars, we may need to make compromises (like creating controlled routes and sticking with automation level 4), or we may need AI that’s significantly more flexible than the AI we have now.

In the next chapter, we’ll look at the types of AI that are behind things like self-driving cars—modeled after brains, evolution, and even the game of call my bluff.

CHAPTER 3

How does it actually learn?

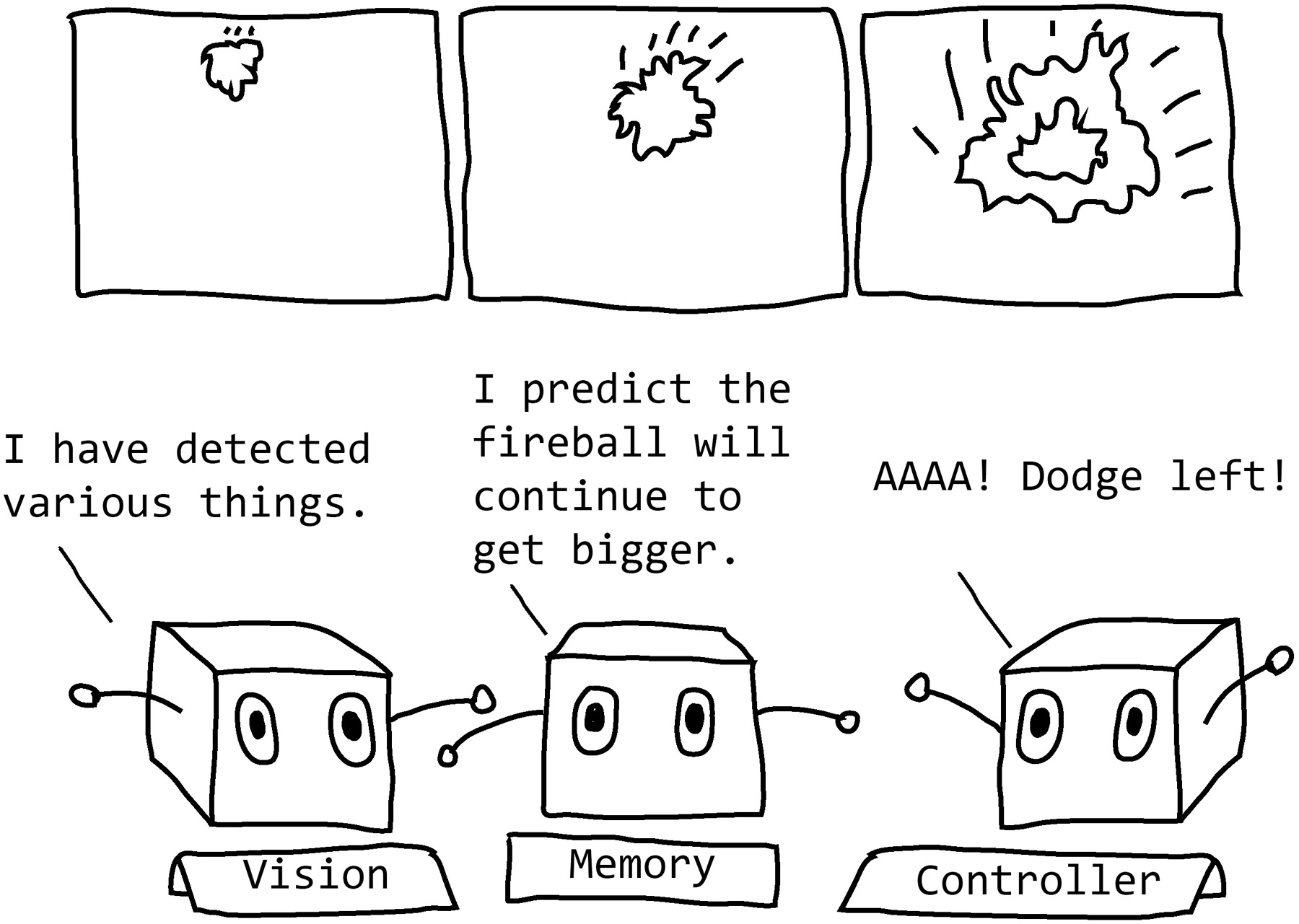

Remember that in this book I’m using the term AI to mean “machine learning programs.” (Refer to the handy chart here for a list of stuff that I am or am not considering to be AI. Sorry, person in a robot suit.) A machine learning program, as I explained in chapter 1, uses trial and error to solve a problem. But how does that process work? How does a program go from producing a jumble of random letters to writing recognizable knock-knock jokes, all without a human telling it how words work or what a joke even is?